============================== \title{ Simulating 500 million years of evolution with a language model

\author{ Thomas Hayes ${ }^{1 *}$ Roshan Rao $^{1 *}$ Halil Akin ${ }^{1 *}$ Nicholas James Sofroniew ${ }^{1 *}$ Deniz Oktay ${ }^{1 *}$ Zeming Lin $^{1 *}$ \ Robert Verkuil ${ }^{1 *}$ Vincent Quy Tran ${ }^{23}$ Jonathan Deaton ${ }^{1}$ Marius Wiggert ${ }^{1}$ Rohil Badkundri ${ }^{1}$ \ Irhum Shafkat ${ }^{1}$ Jun Gong ${ }^{1}$ Alexander Derry ${ }^{1}$ Raul Santiago Molina ${ }^{1}$ Neil Thomas ${ }^{1}$ Yousuf Khan ${ }^{4}$ \ Chetan Mishra ${ }^{1}$ Carolyn Kim ${ }^{1}$ Liam J. Bartie ${ }^{2}$ Patrick D. Hsu ${ }^{23}$ Tom Sercu ${ }^{1}$ Salvatore Candido ${ }^{1}$ \ Alexander Rives ${ }^{1 \dagger}$

\begin{abstract} More than three billion years of evolution have produced an image of biology encoded into the space of natural proteins. Here we show that language models trained on tokens generated by evolution can act as evolutionary simulators to generate functional proteins that are far away from known proteins. We present ESM3, a frontier multimodal generative language model that reasons over the sequence, structure, and function of proteins. ESM3 can follow complex prompts combining its modalities and is highly responsive to biological alignment. We have prompted ESM3 to generate fluorescent proteins with a chain of thought. Among the generations that we synthesized, we found a bright fluorescent protein at far distance ( $58 \%$ identity) from known fluorescent proteins. Similarly distant natural fluorescent proteins are separated by over five hundred million years of evolution.

The article discusses the use of language models to generate functional proteins that are different from known proteins. The authors present a new model called ESM3, which can reason over the sequence, structure, and function of proteins. They used ESM3 to generate fluorescent proteins and found a bright fluorescent protein that is far away from known fluorescent proteins. This suggests that the model can be used to create new proteins that are not found in nature. The authors also note that similar natural fluorescent proteins are separated by over five hundred million years of evolution.

As a result, the patterns in the proteins we observe reflect the action of the deep hidden variables of the biology that have shaped their evolution across time. Gene sequencing surveys \footnotetext{

The patterns in proteins that we observe are a reflection of the deep hidden variables of biology that have shaped their evolution over time. This is because gene sequencing surveys allow us to study the genetic makeup of organisms and how it has changed over time. By analyzing the patterns in these sequences, we can gain insights into the evolutionary history of different species and the factors that have influenced their development. This information can be used to better understand the biology of these organisms and to develop new treatments and therapies for a variety of diseases.

Preview 2024-06-25. Pending submission to bioRxiv. Copyright 2024 by the authors.

The development and evaluation of language models for protein sequences have shown that these models can accurately represent the biological structure and function of proteins without any supervision. As the scale of the models increases, their capabilities also improve. This phenomenon is known as scaling laws, which have been observed in artificial intelligence and describe the growth in capabilities with increasing scale in terms of compute, parameters, and data.

ESM3 is a cutting-edge generative model that is capable of analyzing and generating sequences, structures, and functions of proteins. It is trained as a generative masked language model, which means that it can generate new protein sequences based on the patterns it has learned from existing data.

One of the key features of ESM3 is its ability to reason over the three-dimensional atomic structure of proteins. This is achieved by encoding the structure as discrete tokens, rather than using complex architectures that require diffusion in three-dimensional space. This approach allows ESM3 to be more scalable and efficient, while still providing accurate and detailed analysis of protein structures.

Another important aspect of ESM3 is its ability to model all-to-all combinations of its modalities. This means that it can be prompted with any combination of sequence, structure, and function data, and generate new proteins that respect those prompts. This makes ESM3 a powerful tool for protein engineering and design, as it allows researchers to generate new proteins with specific properties and functions.

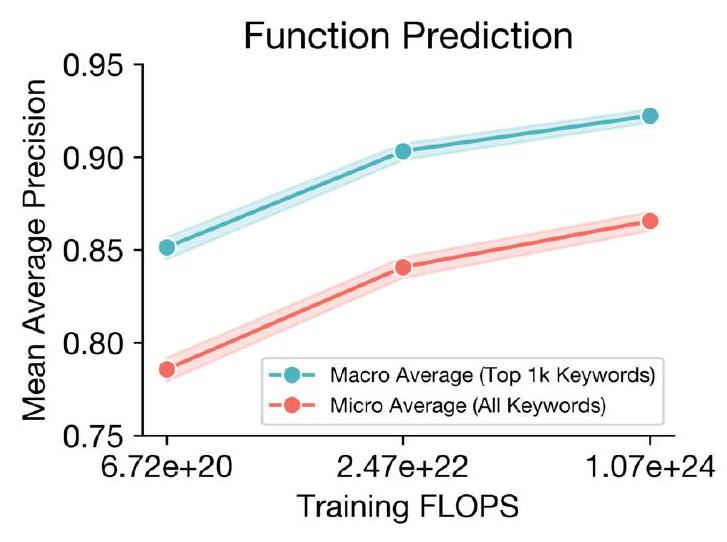

ESM3 at its largest scale was trained with $1.07 \times 10^{24}$ FLOPs on 2.78 billion proteins and 771 billion unique tokens, and has 98 billion parameters. Scaling ESM3 to this 98 billion parameter size results in improvements in the representation of sequence, structure, and function, as well as on generative evaluations. We find that ESM3 is highly responsive to prompts, and finds creative solutions to complex combinations of prompts, including solutions for which we can find no matching structure in nature. We find that models at all scales can be aligned to better follow prompts. Larger models are far more responsive to alignment, and

The ESM3 model was trained using a massive amount of computational power, with $1.07 \times 10^{24}$ FLOPs and 2.78 billion proteins and 771 billion unique tokens. This resulted in a model with 98 billion parameters, which is significantly larger than previous models. The larger size of the model allowed for improvements in the representation of sequence, structure, and function, as well as on generative evaluations.

One interesting finding is that the ESM3 model is highly responsive to prompts, meaning that it can generate creative solutions to complex combinations of prompts. This includes solutions that do not exist in nature. Additionally, the model can be aligned to better follow prompts, with larger models being more responsive to alignment and capable of solving harder prompts after alignment.

Overall, the ESM3 model represents a significant advancement in the field of protein modeling and has the potential to greatly improve our understanding of protein structure and function.

The article discusses the creation of a new green fluorescent protein (GFP) called ESM3. GFPs are proteins that emit fluorescent light and are commonly found in jellyfish and corals. They are widely used in biotechnology as tools for imaging and tracking biological processes. The structure of GFPs is unique, consisting of an eleven-stranded beta barrel with a helix that runs through the center. This structure allows for the formation of a light-emitting chromophore, which is made up of the protein's own atoms. This mechanism is not found in any other protein, indicating that producing fluorescence is a difficult process even for nature.

The statement suggests that the researchers have discovered a new protein called esmGFP, which has a sequence identity of 36% with Aequorea victoria GFP and 58% with the most similar known fluorescent protein. The researchers claim that despite the intense focus on GFP as a target for protein engineering over several decades, proteins this distant have only been found through the discovery of new GFPs in nature. This implies that the discovery of esmGFP is significant and could potentially lead to new insights and applications in protein engineering.

ESM3 is a machine learning model that is designed to analyze and understand the sequence, structure, and function of proteins. It uses a combination of three modalities, which are represented by tokens, to achieve this goal. These modalities are input and output as separate tracks, which are then fused together into a single latent space within the model.

The sequence modality refers to the linear sequence of amino acids that make up a protein. The structure modality refers to the three-dimensional structure of the protein, which is determined by the interactions between the amino acids. The function modality refers to the biological role that the protein plays in the body.

ESM3 is trained using a generative masked language modeling objective, which means that it is able to generate new sequences of amino acids based on the patterns it has learned from the input data. This allows it to make predictions about the structure and function of proteins based on their sequence.

Overall, ESM3 is a powerful tool for understanding the complex world of proteins, and it has the potential to revolutionize the field of protein science.

$$ \mathcal{L}=-\mathbb{E}{x, m}\left[\frac{1}{|m|} \sum{i \in m} \log p\left(x{i} \mid x{\backslash m}\right)\right]

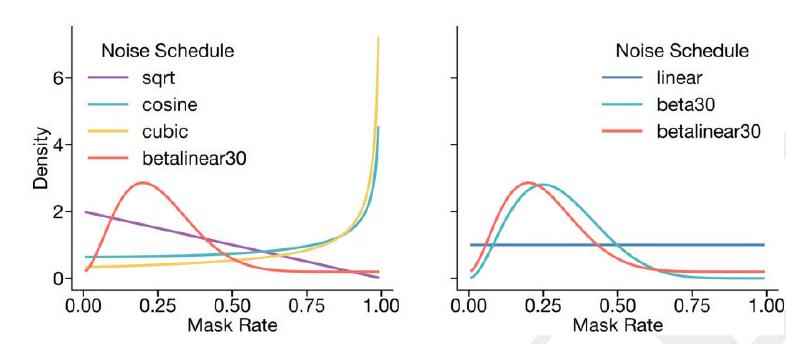

A random mask $m$ is applied to the tokens $x$ describing the protein, and the model is supervised to predict the identity of the tokens that have been masked. During training, the mask is sampled from a noise schedule so that ESM3 sees many different combinations of masked sequence, structure, and function, and predicts completions of any combination of the modalities from any other. This differs from the classical masked language modeling (28) in that the supervision is applied across all possible masking rates rather than a single fixed masking rate. This supervision factorizes the probability distribution over all possible predictions of the next token given any combination of previous tokens, ensuring that tokens can be generated in any order from any starting point (29-31).

The process of generating from ESM3 involves iteratively sampling tokens, starting from a sequence of all mask tokens. The model is trained to predict the identity of masked tokens, and the mask is sampled from a noise schedule to ensure that the model sees many different combinations of masked sequence, structure, and function. This differs from classical masked language modeling in that the supervision is applied across all possible masking rates, allowing for the prediction of completions of any combination of modalities from any other. The supervision factorizes the probability distribution over all possible predictions of the next token given any combination of previous tokens, ensuring that tokens can be generated in any order from any starting point. The masking is applied independently to sequence, structure, and function tracks, enabling generation from any combination of empty, partial, or complete inputs. The training objective is effective for representation learning, and a noise schedule is chosen that balances generative capabilities with representation learning.

Tokenization is a process that allows for efficient reasoning over structure. In the context of protein structures, tokenization involves compressing the high dimensional space of three-dimensional structure into discrete tokens. This is achieved through the use of a discrete auto-encoder, which is trained to compress the structure into tokens.

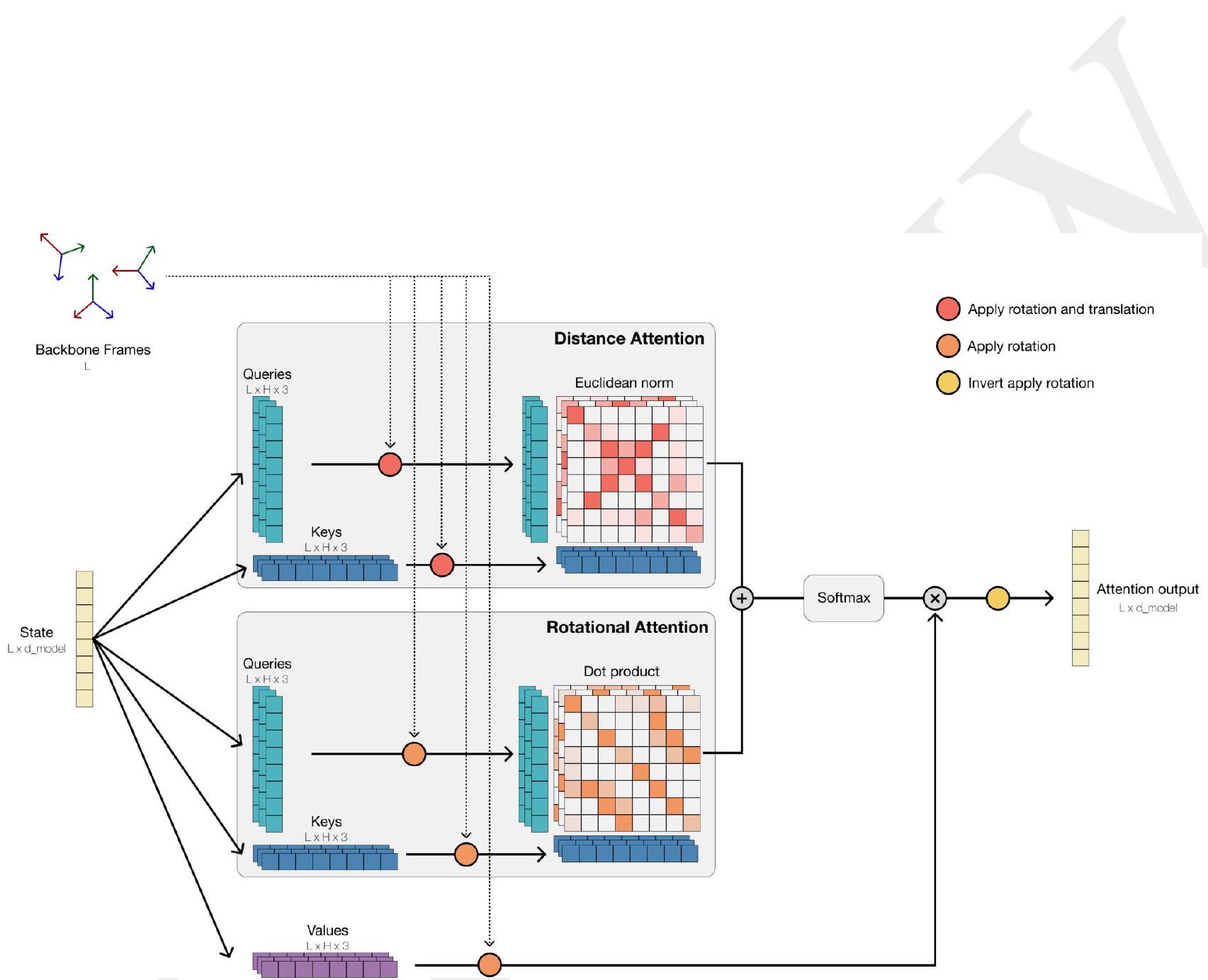

To efficiently process three-dimensional structure, an invariant geometric attention mechanism is proposed. This mechanism operates in local reference frames defined by the bond geometry at each amino acid, and allows local frames to interact globally through a transformation into the global frame. This mechanism can be efficiently realized through the same computational primitives as attention, and is readily scalable.

The local structural neighborhoods around each amino acid are encoded into a sequence of discrete tokens, one for each amino acid. This allows for efficient reasoning over the structure of proteins, which can be useful in a variety of applications, such as drug discovery and protein engineering.

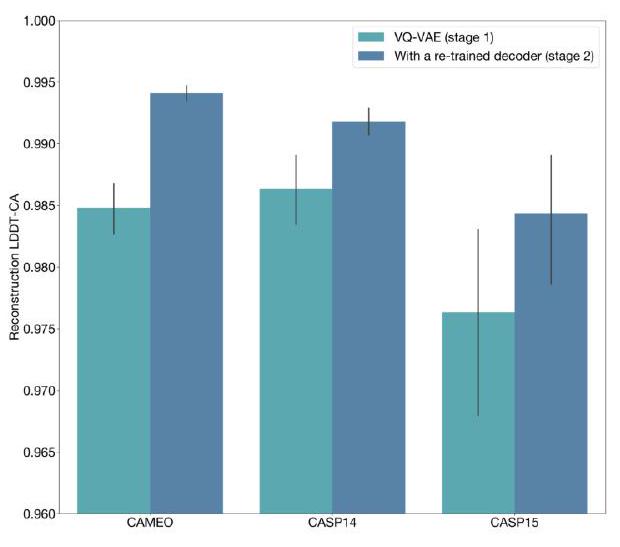

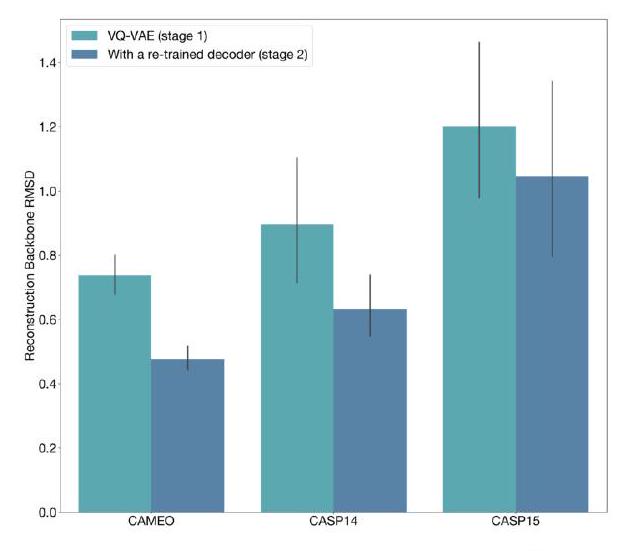

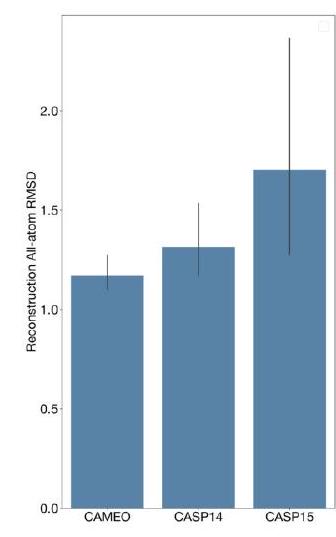

When predicting or generating protein structure, the ESM3 algorithm outputs structure tokens that are then passed to the decoder. The decoder uses these tokens to reconstruct the all-atom structure of the protein. The autoencoder is trained to encode and reconstruct atomic coordinates using a geometric loss that supervises the pairwise distances and relative orientations of bond vectors and normals. This approach allows for near-perfect reconstruction of protein structure, with an RMSD of less than 0.3 Å on CAMEO. As a result, the representation of protein structure can be done with atomic accuracy, both at the input and output levels.

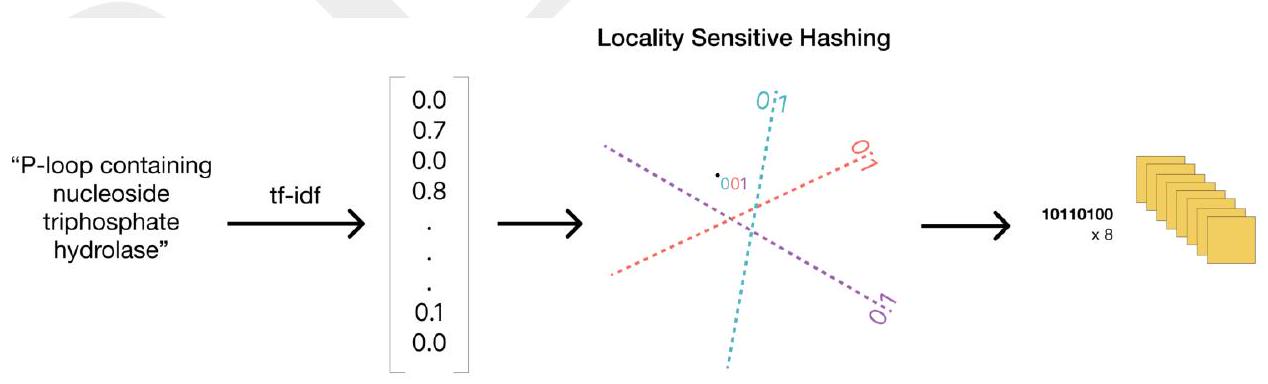

This statement is discussing the use of a language model called ESM3, which is designed to assist with protein structure prediction. The statement explains that ESM3 can be given direct access to atomic coordinates through a geometric attention projection, which improves its ability to respond to prompts related to atomic coordinates. Additionally, ESM3 can be conditioned on either or both of tokenized structure and atomic coordinates, and is supplemented with coarse-grained tokens that encode secondary structure state and solvent accessible surface area. Finally, the statement notes that function is presented to the model in the form of tokenized keyword sets for each position in the sequence.

transformer encoder and decoder. The resulting model can be used for a variety of tasks, including protein classification, structure prediction, and function prediction. The bidirectional nature of the transformer allows for efficient processing of both forward and backward sequences, which is particularly useful for protein sequences where the order of amino acids is important. Overall, ESM3 is a powerful tool for protein modeling and prediction.

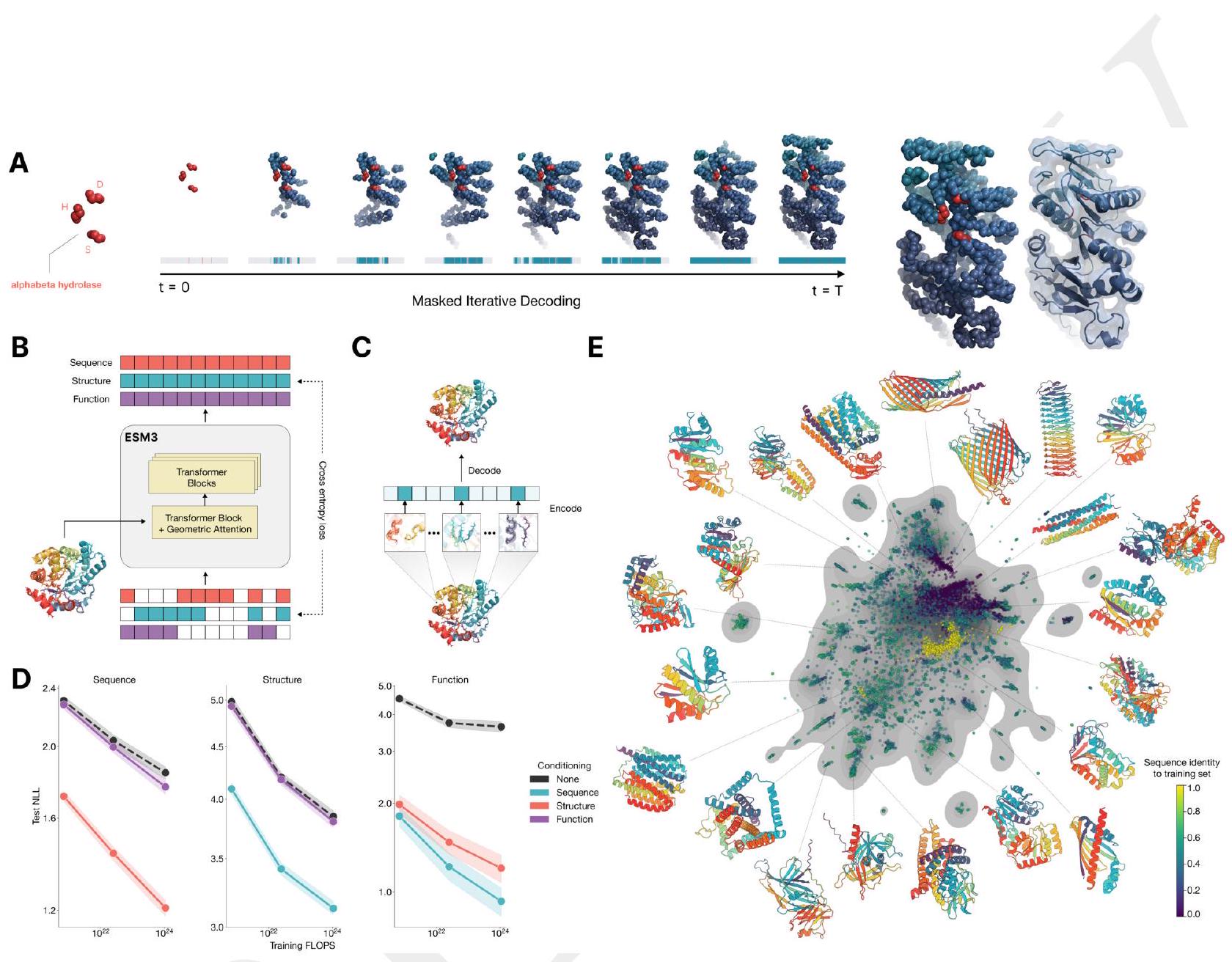

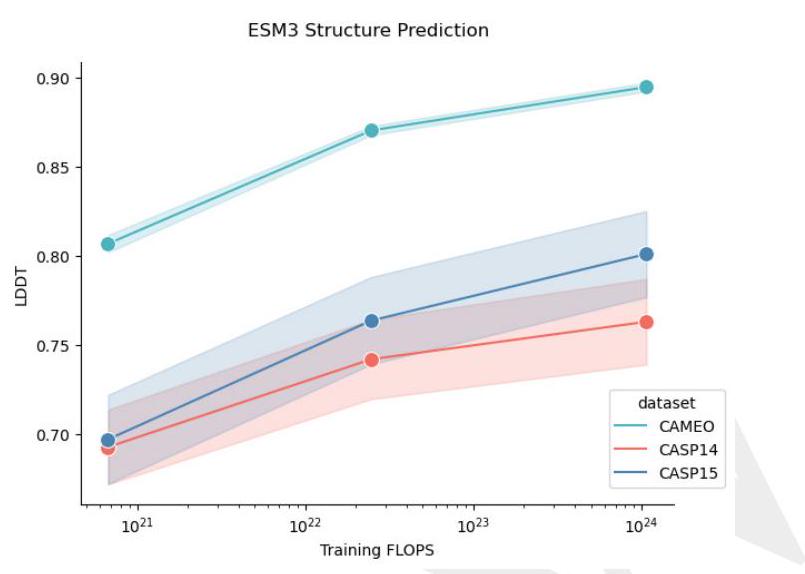

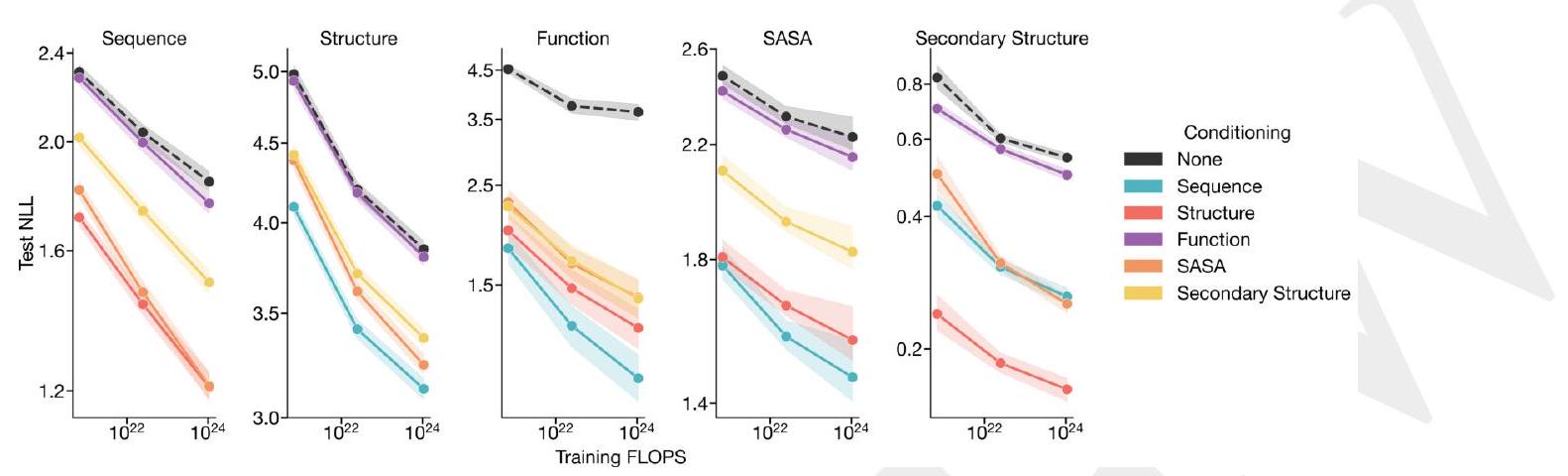

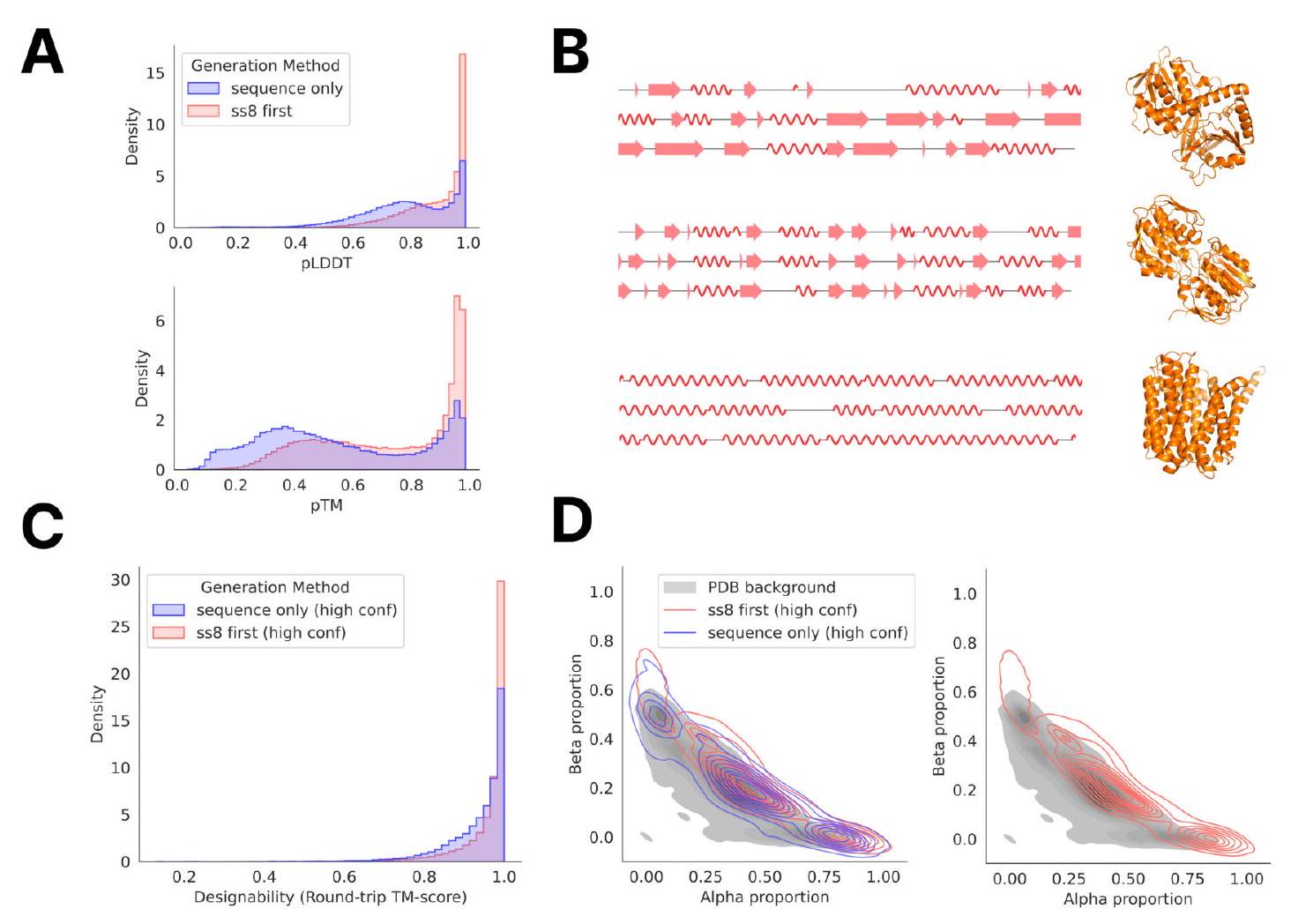

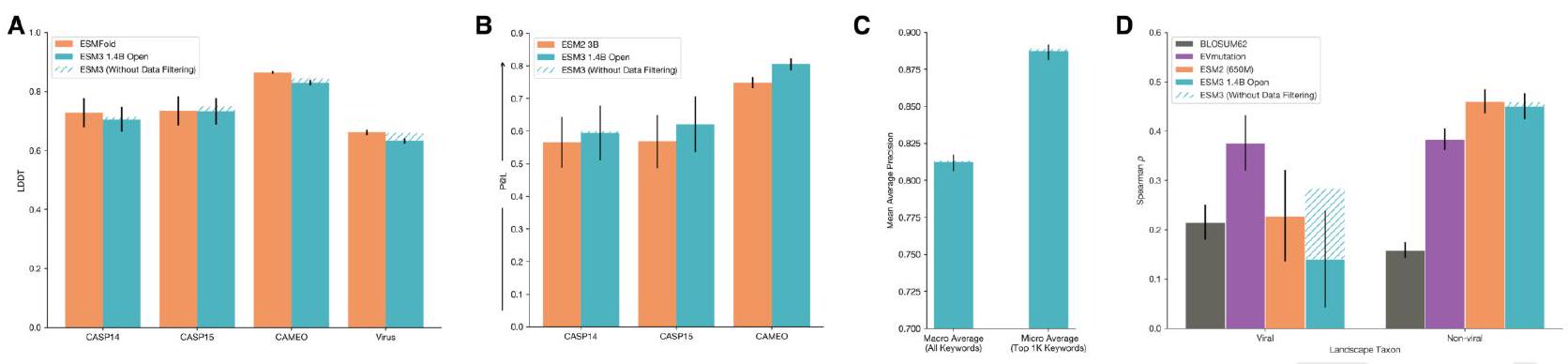

Figure 1. ESM3 is a generative language model that reasons over the sequence, structure, and function of proteins. (A) Iterative sampling with ESM3. Sequence, structure, and function can all be used to prompt the model. At each timestep $\mathrm{t}$, a fraction of the masked positions are sampled until all positions are unmasked. (B) ESM3 architecture. Sequence, structure, and function are represented as tracks of discrete tokens at the input and output. The model is a series of transformer blocks, where all tracks are fused within a single latent space; geometric attention in the first block allows conditioning on atomic coordinates. ESM3 is supervised to predict masked tokens. (C) Structure tokenization. Local atomic structure around each amino acid is encoded into tokens. (D) Models are trained at three scales: 1.4B, 7B, and 98B parameters. Negative log likelihood on test set as a function of training FLOPs shows response to conditioning on each of the input tracks, improving with increasing FLOPs. (E) Unconditional generations from ESM3 98B (colored by sequence identity to the nearest sequence in the training set), embedded by ESM3, and projected by UMAP alongside randomly sampled sequences from UniProt (in gray). Generations are diverse, high quality, and cover the distribution of natural sequences.

ESM3 is a generative language model that can reason over the sequence, structure, and function of proteins. It uses iterative sampling to prompt the model with sequence, structure, and function information. The model is represented as tracks of discrete tokens at the input and output, and it is a series of transformer blocks that fuse all tracks within a single latent space. The model is supervised to predict masked tokens and is trained at three scales: 1.4B, 7B, and 98B parameters. The model can generate diverse, high-quality sequences that cover the distribution of natural sequences. The structure of the protein is tokenized and encoded into tokens, and the model includes a geometric attention layer for atomic structure coordinate conditioning. The final layer representation is projected into token probabilities for each of the tracks using shallow MLP heads.

The ESM3 model is a machine learning model that has been trained on a vast amount of data, including 2.78 billion natural proteins derived from sequence and structure databases. However, since only a small fraction of structures have been experimentally determined, the model also leverages predicted structures and generates synthetic sequences with an inverse folding model. Additionally, function keywords are predicted from sequence using a library of hidden markov models. This results in a total of 3.15 billion protein sequences, 236 million protein structures, and 539 million proteins with function annotations, totaling 771 billion unique tokens. The full details of the training dataset are described in Appendix A.2.1.8.

The researchers trained ESM3 models at three different scales, with varying numbers of parameters. They conducted experiments to evaluate the performance of representation learning in response to different architecture hyperparameters, and found that increasing depth had a greater impact than increasing width. Based on these findings, they chose to use relatively deep networks for their final architectures, with the largest model incorporating 216 Transformer blocks. The details of these experiments can be found in Appendix A.1.5.

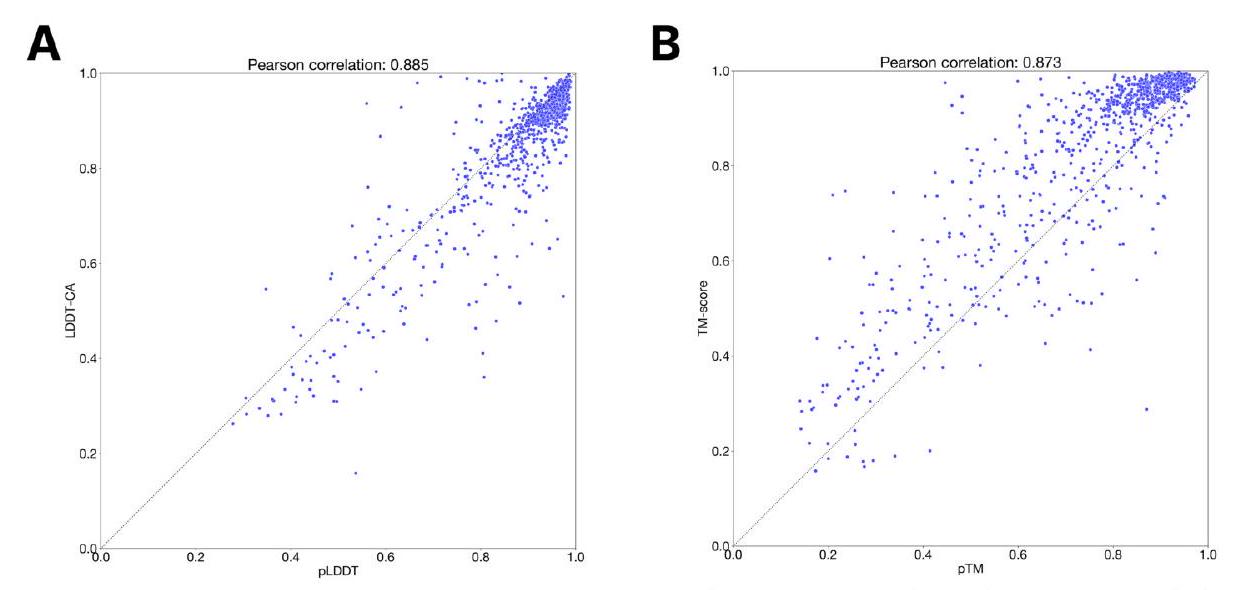

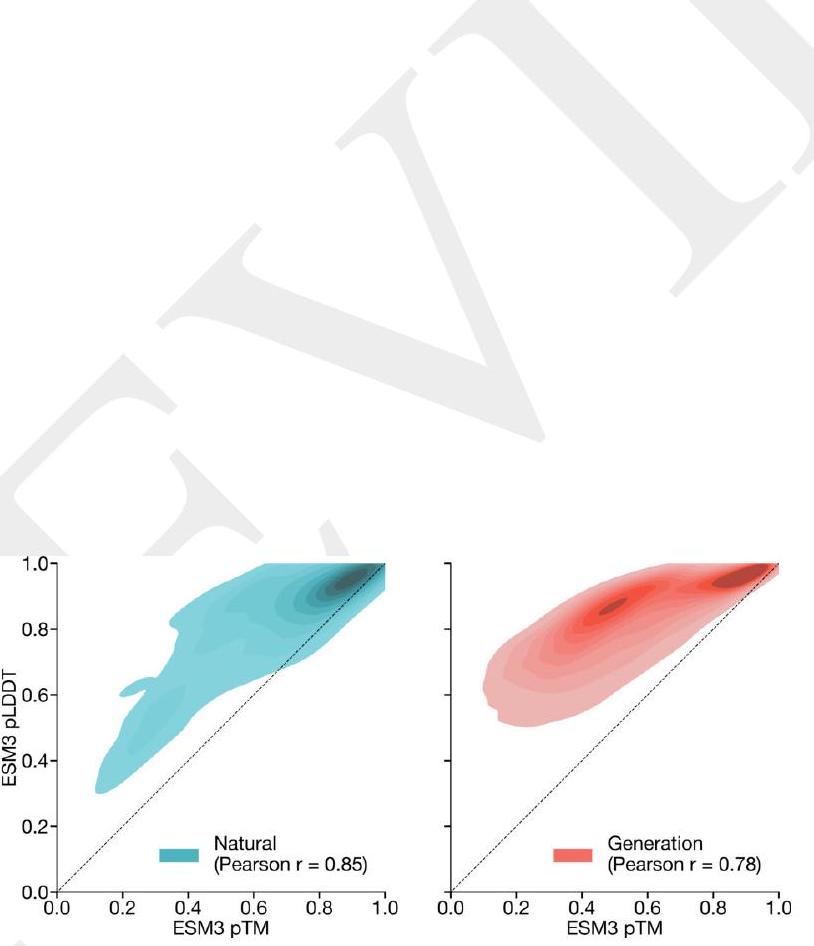

Scaling ESM3 from 1.4 billion to 98 billion parameters has led to significant improvements in the validation loss for all tracks, with the most notable improvements observed in sequence loss. These improvements have resulted in better representation learning, as demonstrated by the results in Table S7 and Fig. S8. In single sequence structure prediction on CAMEO, ESM3 98B outperformed ESMFold with a mean local distance difference test (LDDT) of 0.895. Additionally, unconditional generation produced high-quality proteins with a mean predicted LDDT of 0.84 and predicted template modeling score (pTM) of 0.52, which were diverse in both sequence and structure, spanning the distribution of known proteins. These findings are illustrated in Fig. 1E and Fig. S13.

Programmable design with ESM3 refers to the use of the Enterprise Systems Management Model (ESM3) to create a flexible and adaptable design for enterprise systems. ESM3 is a framework that provides guidelines and best practices for managing and optimizing enterprise systems, including IT infrastructure, applications, and services.

By using ESM3, organizations can design their systems to be more programmable, meaning that they can be easily modified and customized to meet changing business needs. This can be achieved through the use of automation, orchestration, and other tools that enable rapid and efficient system configuration and management.

Programmable design with ESM3 can help organizations to improve their agility, reduce costs, and enhance their ability to respond to changing market conditions. It can also help to improve the overall performance and reliability of enterprise systems, by enabling more proactive monitoring and management of system resources.

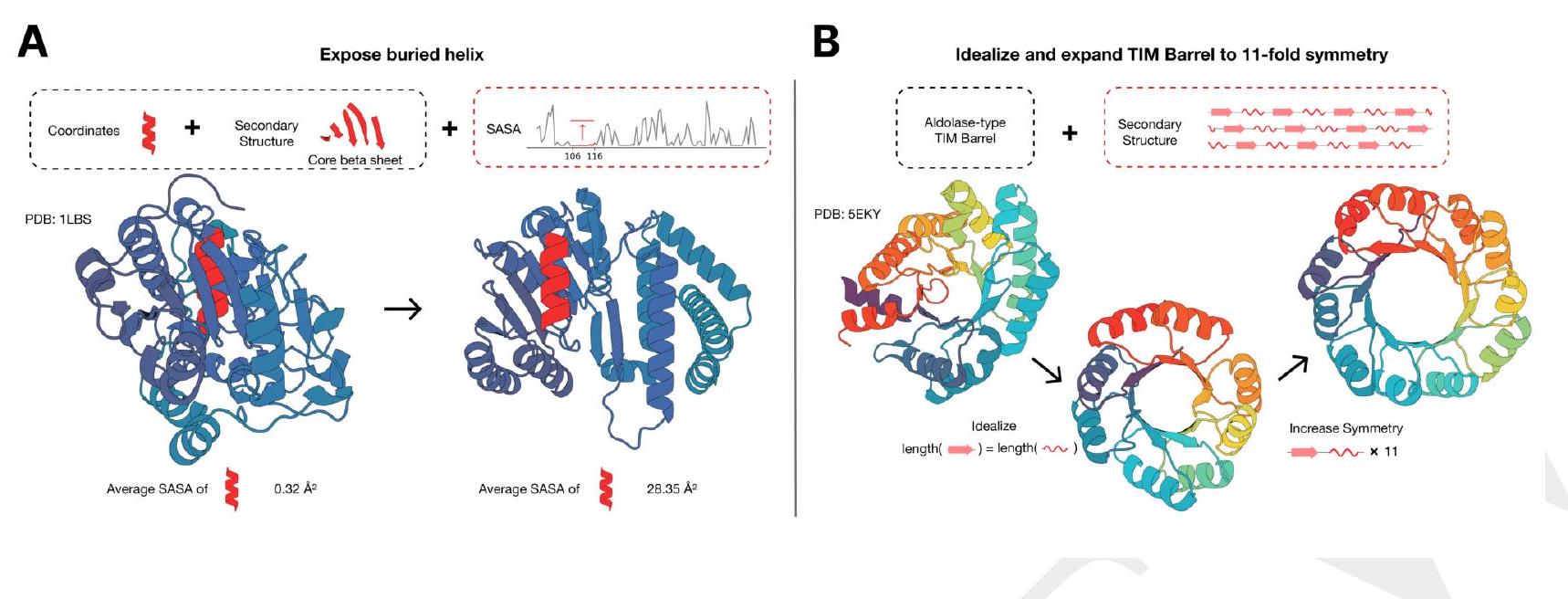

ESM3 is a language model that has the ability to follow complex prompts with different compositions. It can be prompted with instructions from each of its input tracks, which include sequence, structure coordinates, secondary structure (SS8), solvent-accessible surface area (SASA), and function keywords. This allows prompts to be specified at multiple levels of abstraction, ranging from atomic level structure to high level keywords describing the function and fold topology.

The language model uses a learned generative model to find a coherent solution that respects the prompt. This means that ESM3 can generate a response that is consistent with the input prompt, even if the prompt is complex and includes multiple levels of abstraction.

Overall, ESM3 is a powerful tool for generating responses to complex prompts that involve different levels of abstraction. It can be used by experts in various fields to generate coherent and accurate responses to complex prompts.

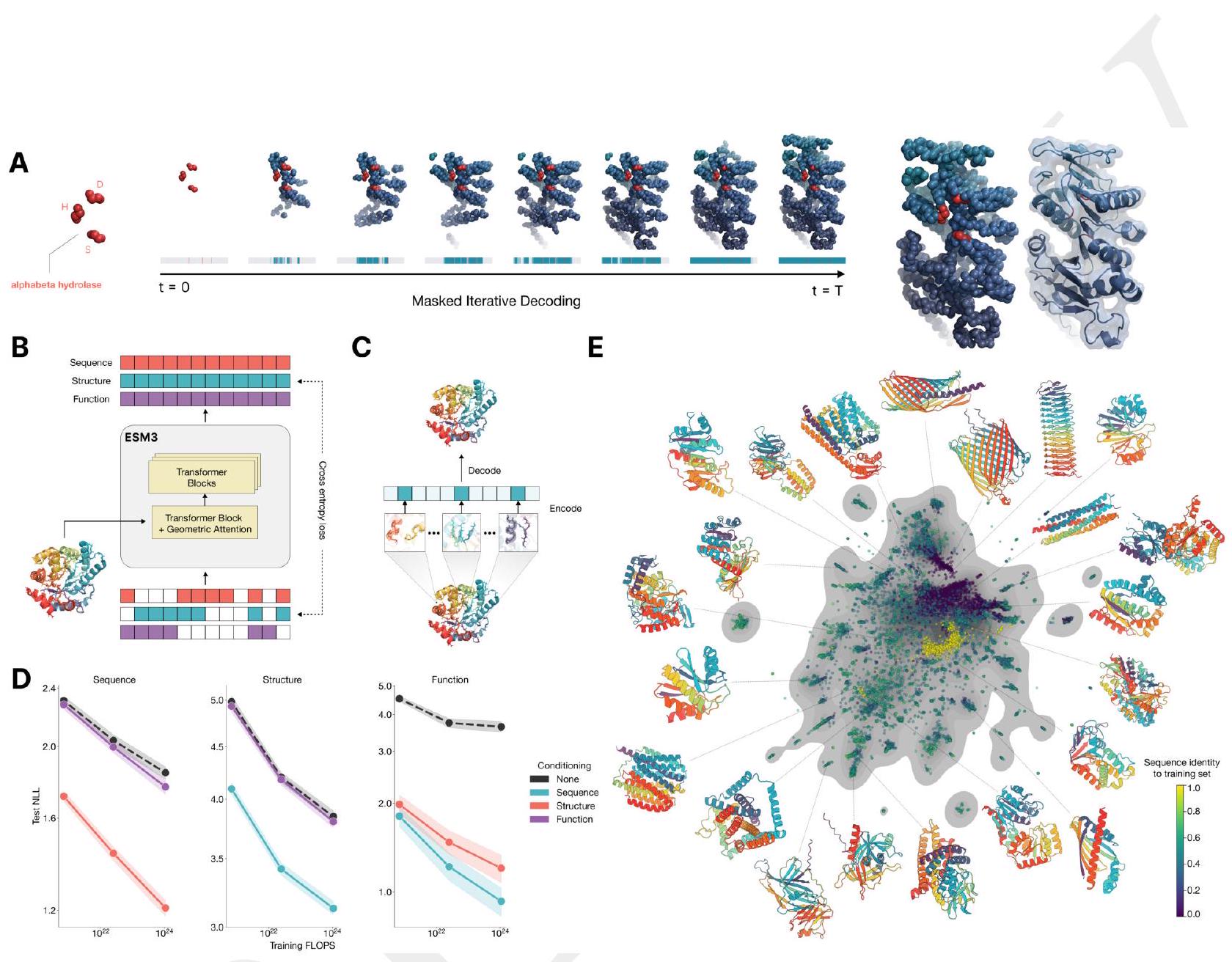

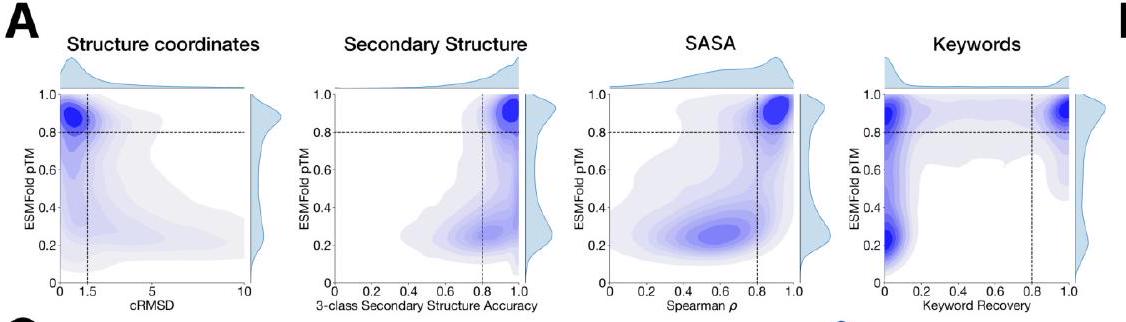

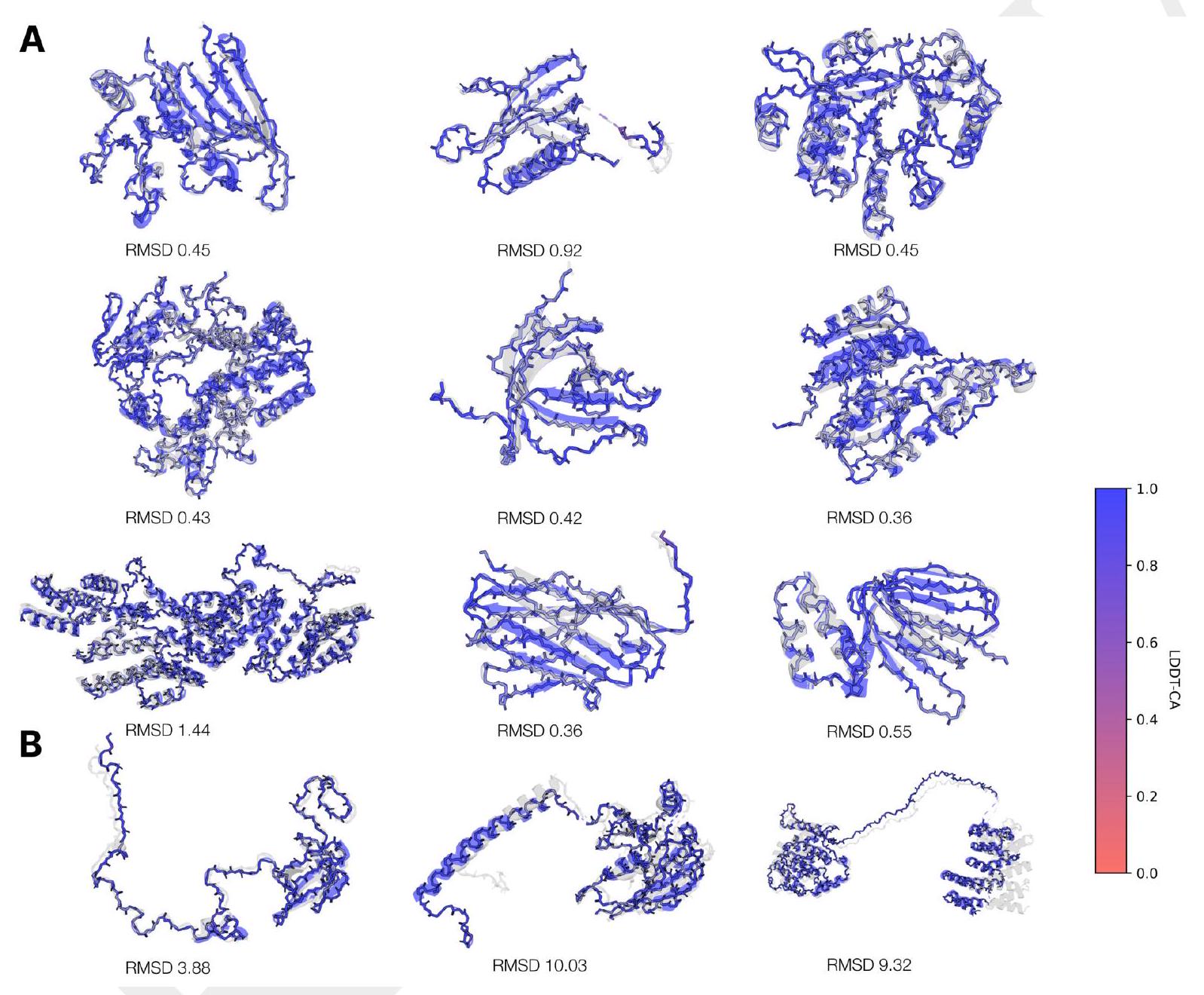

The evaluation of ESM3's ability to follow prompts in each of the tracks independently involves constructing a set of prompts for each track using a temporally held out test set of natural proteins. The resulting generations are then evaluated for consistency with the prompt and foldability, as well as the confidence of the structure prediction TM-score under ESMFold. Consistency metrics are defined for each track, including constrained site RMSD, SS3 accuracy, SASA spearman $\rho$, and keyword recovery. The evaluation shows that ESM3 is able to find solutions that follow the prompt and have confidently predicted structures by ESMFold, with pTM $>0.8$ across all tracks.

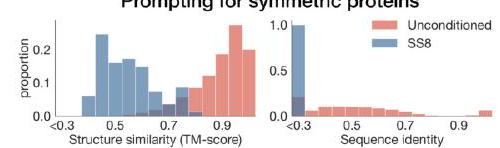

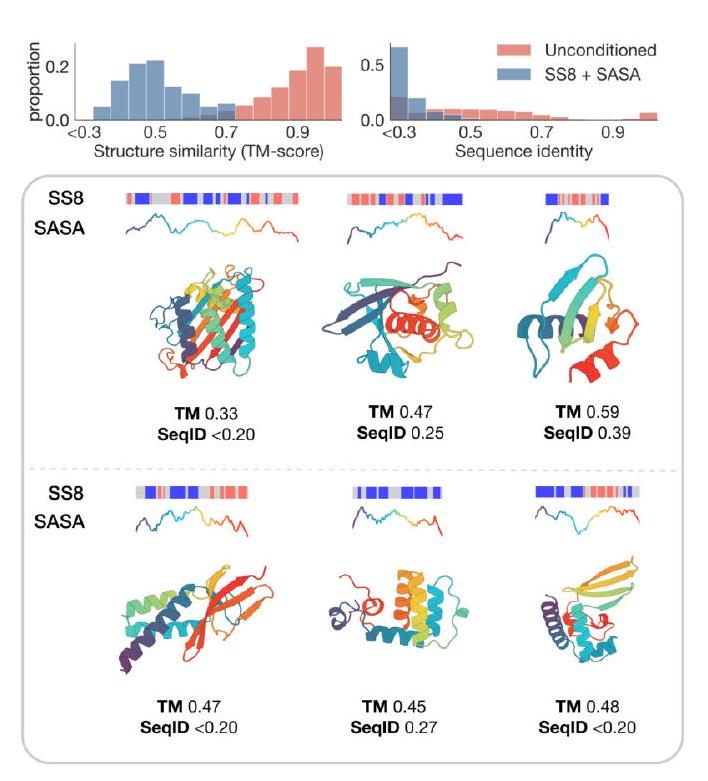

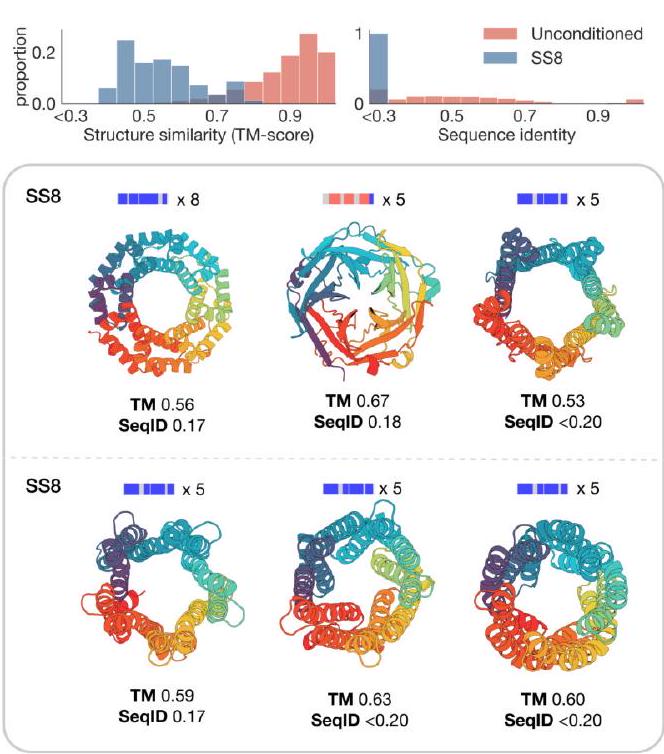

The study aimed to determine if the ESM3 model can generate proteins that differ from natural proteins by using prompts that are out-of-distribution. The researchers constructed a set of prompts combining SS8 and SASA from held-out structures and found that the model can generate coherent globular structures with a mean pTM of 0.85 ± 0.03. However, the distribution of similarities to the training set shifted to be more novel, with an average sequence identity to the nearest training set protein of less than 20% and a mean TM-score of 0.48 ± 0.09. To test the ability to generalize to structures beyond the distribution of natural proteins, the researchers used secondary structure prompts derived from a dataset of artificial symmetric protein designs distinct from the natural proteins found in the training dataset. The results showed that ESM3 can produce high confidence generations with low sequence and structure similarity to proteins in the training set, indicating that the model can generate protein sequences and structures highly distinct from those that exist in nature.

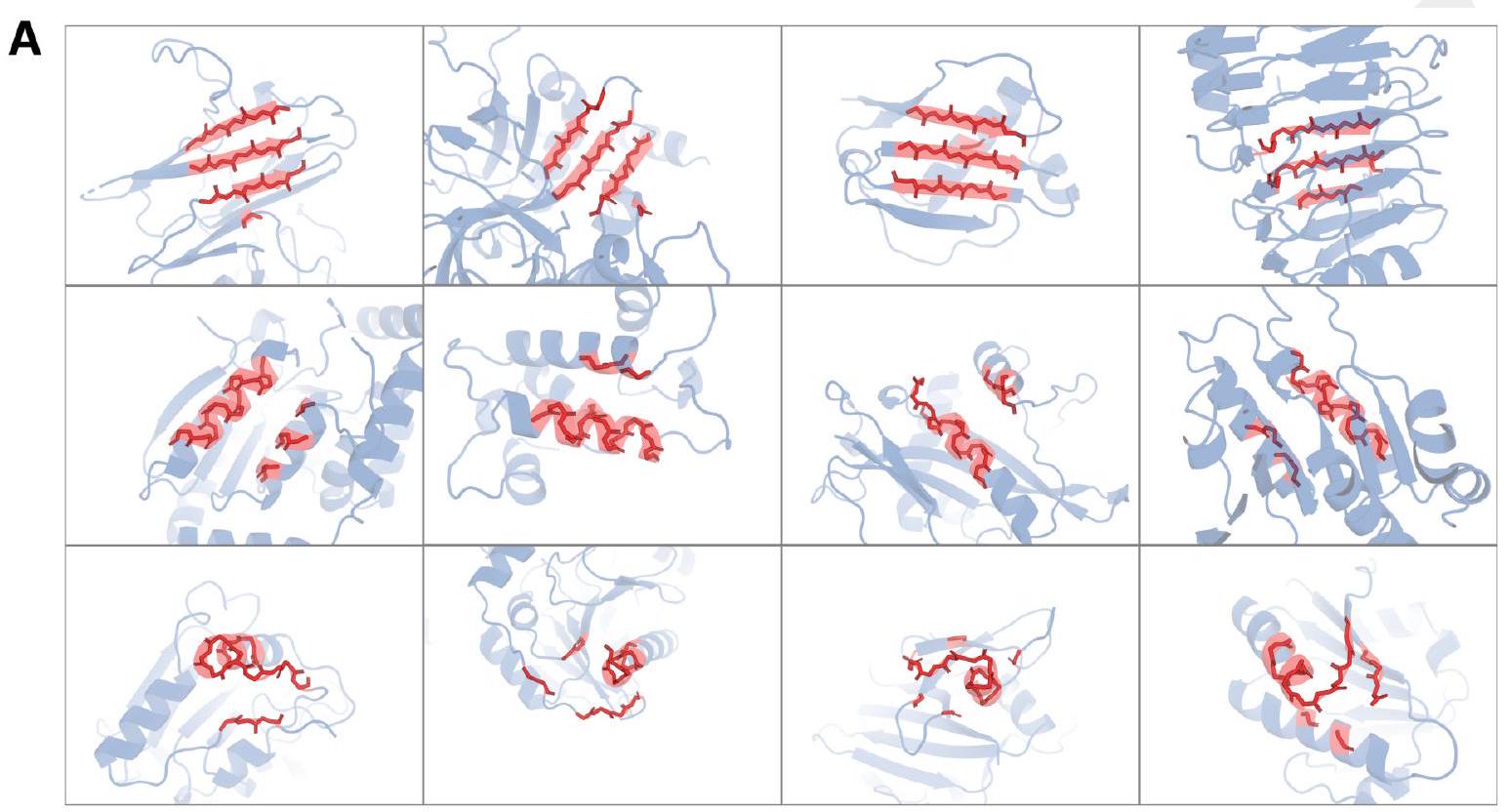

ESM3 is a model that has the capability to understand and respond to complex instructions. It can also create prompts from various sources and at different levels of complexity. To assess its abilities, we provide ESM3 with motifs that require it to determine the spatial arrangement of individual atoms, including those that involve coordination between residues that are distant from each other in the sequence, such as catalytic centers and ligand binding sites.

A

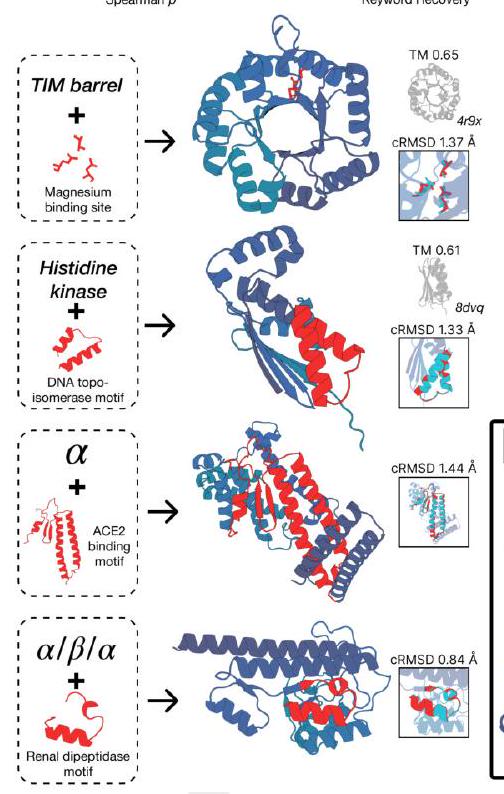

Brompting for out-of-distribution folds

Figure 2 shows the capabilities of ESM3, a generative programming tool. In (A), ESM3 can follow prompts from each of its input tracks and generate consistent and foldable proteins. In (B), ESM3 can be prompted to generate proteins that differ in structure and sequence from natural proteins, shifting towards a more novel space. In (C), ESM3 generates creative solutions to complex prompts, such as compositions of atomic level motifs with high level instructions specified through keyword or secondary structure. The fidelity to the prompt is shown via similarity to reference structure or all-atom RMSD to the prompted structure. In (D), ESM3 shows especially creative behavior by compressing a serine protease by 33% while maintaining the active site structure.

This statement is describing a process for generating protein structures using a combination of motifs and scaffolds. The goal is to create unique combinations that satisfy certain criteria, such as having a low coordinate root mean square deviation (cRMSD) and a high level of accuracy for secondary structure predictions. The process involves generating samples until the desired criteria are met with a high level of confidence. The pTM and pLDDT values are measures of the confidence level in the generated structures.

ESM3 is a tool that can solve a variety of tasks related to protein motifs without relying on the original scaffold. It does this by using a combination of existing protein structures and novel scaffolds. In some cases, the scaffolds are transferred from existing proteins with similar motifs, while in other cases, entirely new scaffolds are created. The generated solutions have high designability, meaning they can be confidently recovered using inverse folding with ESMFold. Overall, ESM3 is a powerful tool for protein motif design and analysis.

In this experiment, the researchers used prompt engineering to generate creative responses to prompts. They started with a natural trypsin protein (PDB $1 \mathrm{Y} 3 \mathrm{~V}$ ) and reduced the overall generation length by a third (from 223 to 150 residues). They then prompted with the sequence and coordinates of the catalytic triad as well as functional keywords describing trypsin.

The researchers used ESM3 to maintain the coordination of the active site and the overall fold with high designability, despite the significant reduction in sequence length and the fold only being specified by the function keyword prompt. The results showed that the coordination of the active site was maintained with a cRMSD of $0.73 \AA$, and the overall fold had high designability with a pTM of 0.84 and a scTM mean of 0.97 and std of 0.006.

Overall, this experiment demonstrates the potential of prompt engineering to generate creative responses to prompts and the ability of ESM3 to maintain the coordination of the active site and the overall fold with high designability, even with a significant reduction in sequence length.

ESM3 is a protein design tool that can generate creative solutions to prompts specified in any of its input tracks, either individually or in combination. This means that it can provide control at various levels of abstraction, from high-level topology to atomic coordinates, using a generative model to bridge the gap between the prompt and biological complexity. Essentially, ESM3 allows for a rational approach to protein design by enabling users to specify their desired outcomes and then generating potential solutions that meet those criteria. This can be particularly useful for researchers who are looking to design proteins with specific properties or functions, as it allows them to explore a wide range of possibilities and identify the most promising candidates for further study.

As an expert in the field, you may be interested in the potential for larger models to have even greater latent capabilities that are not yet fully observed. While we have seen significant improvements in performance with the base models, there may be untapped potential for larger models to excel in certain tasks.

For example, the base ESM3 models have shown the ability to perform complex tasks such as atomic coordination and composition of prompts, despite not being specifically optimized for these objectives. Additionally, the properties we evaluate generative outputs on, such as high pTM, low cRMSD, and adherence to multimodal prompting, are only indirectly observed by the model during pre-training.

By aligning the model directly to these tasks through finetuning, we may be able to unlock even greater capabilities with larger models. This could lead to significant advancements in the field and potentially revolutionize the way we approach certain tasks.

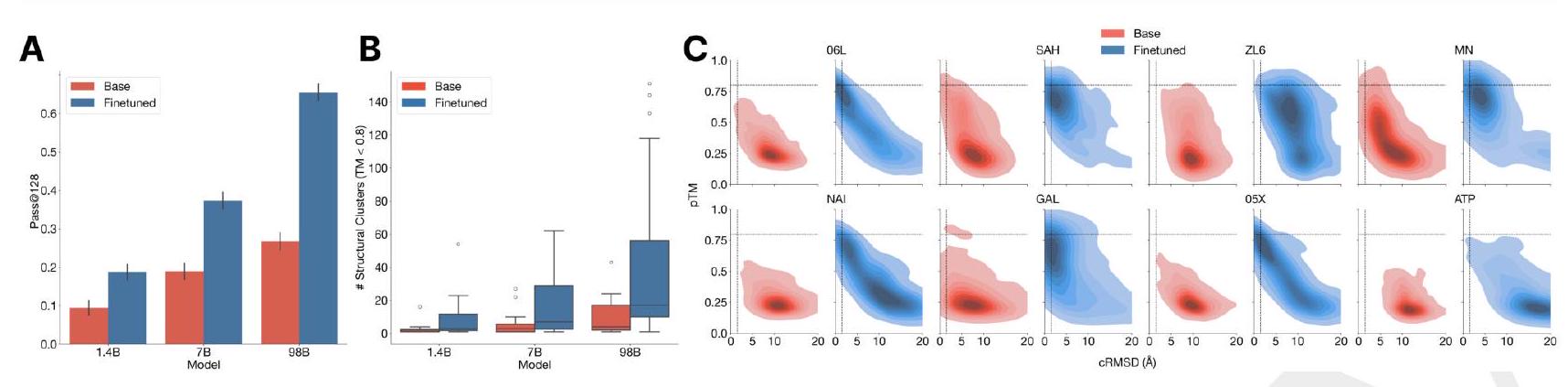

This passage describes a process for aligning base models to generate proteins that meet specific requirements. The process involves constructing a dataset of partial structure prompts, generating multiple protein sequences for each prompt, and then using ESM3 to fold and score each sequence based on consistency with the prompt and foldability. High quality samples are paired with low quality samples for the same prompt to create a preference dataset, which is used to tune ESM3 to optimize a preference tuning loss. This tuning incentivizes the model to prioritize high quality samples over low quality samples.

To evaluate the performance of the ESM3 1.4B, 7B, and 98B base models, we first align them. We then measure their absolute performance and the shift in the distribution of generations. To determine the consistency of a generation with a prompt, we fold the generated sequence and use structural metrics (backbone cRMSD $<1.5 \AA$ ) and foldability (pTM $>0.8$ ) to measure success. To ensure that the model used for evaluation is independent of the one used for creating the preference dataset, we conduct these evaluations using ESMFold.

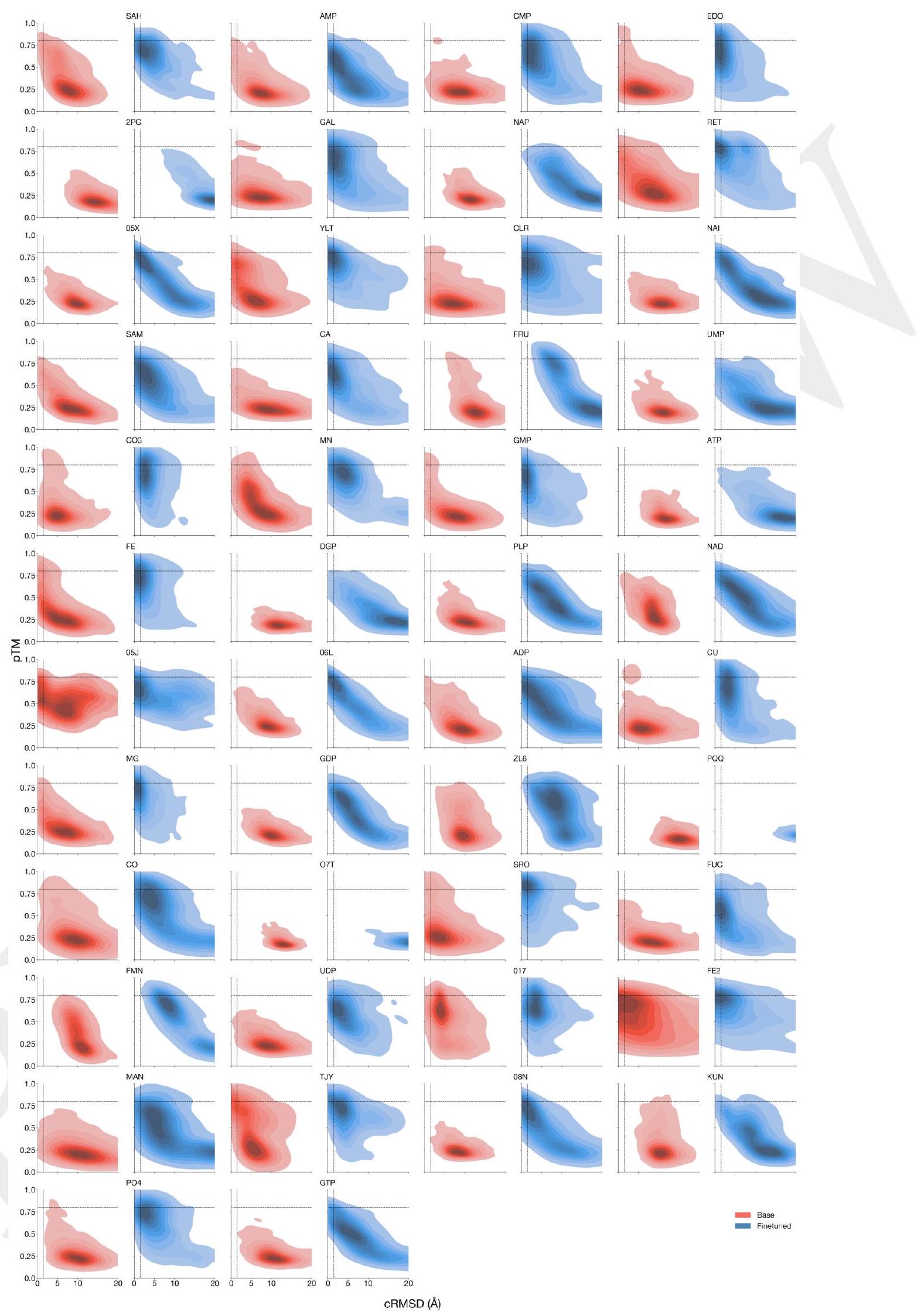

We are testing the effectiveness of the ESM3 model in generating high-quality scaffolds using complex tertiary motif scaffolding prompts. To do this, we use a dataset of 46 ligand binding motifs from a set of proteins that have been held out temporarily. We create 1024 prompts for each motif task by randomly changing the order of the residues, their position in the sequence, and the length of the sequence. We then generate a single protein for each prompt. We evaluate the success of the model by calculating the percentage of tasks solved (backbone cRMSD <1.5 Å, pTM >0.8) after 128 generations. The results of this evaluation can be found in Appendix A.4.5.

ment to the PDB (Fig. 3B). The fraction of residues in common with the PDB is $0.5 \%$ for 1.4B, $1.7 \%$ for 7B, and $3.1 \%$ for 98B. The preference tuned models show a much larger fraction of residues in common with the PDB $(17.1 \%$ for 1.4B, $28.1 \%$ for 7B, $41.1 \%$ for 98B; Fig. 3B). The preference tuned models also show a much larger fraction of residues in common with the PDB for the subset of the PDB that is of comparable difficulty to the CASP9 targets (Fig. 3B).

In summary, the preference tuned models are able to solve double the number of atomic coordination tasks compared to the base models, and they also show a much larger capability difference when aligned to the PDB. This suggests that the preference tuned models are more accurate and reliable in predicting protein structures.

Figure 3 shows the results of an experiment where a model was trained to solve complex tasks related to protein structure prediction. The model was trained using a dataset of preference pairs, where positive samples with good scores for desired properties were paired with negative samples with worse scores. The model was then evaluated by prompting it with coordinates in tertiary contact.

The results show that after training, the model's ability to solve complex tasks increases with scale through alignment. This means that as the model becomes larger, it becomes better at solving complex tasks. The response to alignment shows a latent capability to solve complex tasks in the largest model.

Additionally, after finetuning, the model was able to generate a number of unique structures for ligands for which there were successes. The densities of prompted generations also tended to improve meaningfully after alignment, indicating that the fidelity to the prompt and quality of generations improved.

Overall, the results suggest that alignment can significantly improve the performance of models in solving complex tasks related to protein structure prediction.

The text discusses the performance of preference-tuned models in solving tasks and finding solutions. The models are evaluated based on the number of distinct structural clusters with a certain level of accuracy. The results show that preference-tuned models not only solve a greater proportion of tasks but also find a greater number of solutions per task. The distribution of ESMFold pTM and backbone cRMSD for each ligand binding motif is observed to shift. The finetuned model produces more distinct successful clusters than the base model on 37 of the 46 tested ligands. The preference tuning leads to larger improvements at all scales compared to a supervised finetuning baseline.

Certainly! Generating a new fluorescent protein involves several steps, including gene synthesis, protein expression, and characterization of the protein's properties.

Gene synthesis: The first step is to design and synthesize a gene that encodes the desired fluorescent protein. This can be done using various techniques, such as PCR-based methods or gene synthesis technologies. The gene should be designed to include the necessary codons for the fluorescent protein's amino acid sequence, as well as any necessary regulatory elements for expression.

Protein expression: Once the gene has been synthesized, it can be cloned into an expression vector and introduced into a host cell for protein expression. The host cell can be a bacterial cell, yeast cell, or mammalian cell, depending on the specific requirements of the fluorescent protein. The protein can be expressed either intracellularly or secreted into the culture medium.

Protein purification: After the protein has been expressed, it needs to be purified from the host cell or culture medium. This can be done using various techniques, such as affinity chromatography or size exclusion chromatography, depending on the properties of the protein.

Protein characterization: Once the protein has been purified, it needs to be characterized to determine its properties, such as its fluorescence spectrum, brightness, and stability. This can be done using various techniques, such as fluorescence spectroscopy or microscopy.

Protein optimization: If the initial protein does not meet the desired specifications, it may need to be optimized through further gene synthesis and protein expression. This can involve modifying the gene sequence to improve the protein's properties, or using different expression systems or purification methods.

The GFP family of proteins is responsible for the fluorescence of jellyfish and the vivid colors of coral. These proteins have a unique ability to form a fluorescent chromophore without the need for cofactors or substrates. This property allows the GFP sequence to be inserted into the genomes of other organisms, which can then be used to visibly label molecules, cellular structures, or processes. This has proven to be a valuable tool in the biosciences, as it allows researchers to track and observe biological processes in real-time.

The GFP family has been extensively studied and modified through protein engineering, but most functional variants have been found in nature. However, rational design and machine learning-assisted high-throughput screening have led to the development of GFP sequences with improved properties, such as higher brightness or stability, or different colors. These modifications typically involve only a few mutations, usually less than 15 out of the total 238 amino acid coding sequence. Studies have shown that random mutations can quickly reduce fluorescence to zero, but in rare cases, scientists have been able to introduce up to 40-50 mutations while retaining GFP fluorescence.

, is essential for the formation of the chromophore. The chromophore is formed through a series of chemical reactions that involve the oxidation and cyclization of the amino acids. The resulting chromophore is a planar heterocyclic structure that is responsible for the fluorescence of GFP.

To generate a new GFP, one would need to understand the complex biochemistry and physics involved in the formation of the chromophore and the structure of the protein. This would require a deep understanding of the chemical reactions involved in the formation of the chromophore, as well as the structural features of the protein that are necessary for the formation of the chromophore.

In addition, one would need to be able to manipulate the amino acid sequence of the protein to create a new GFP with the desired properties. This would require a thorough understanding of protein structure and function, as well as the ability to predict the effects of amino acid substitutions on the structure and function of the protein.

Overall, generating a new GFP would be a complex and challenging task that would require a deep understanding of biochemistry, physics, and protein structure and function.

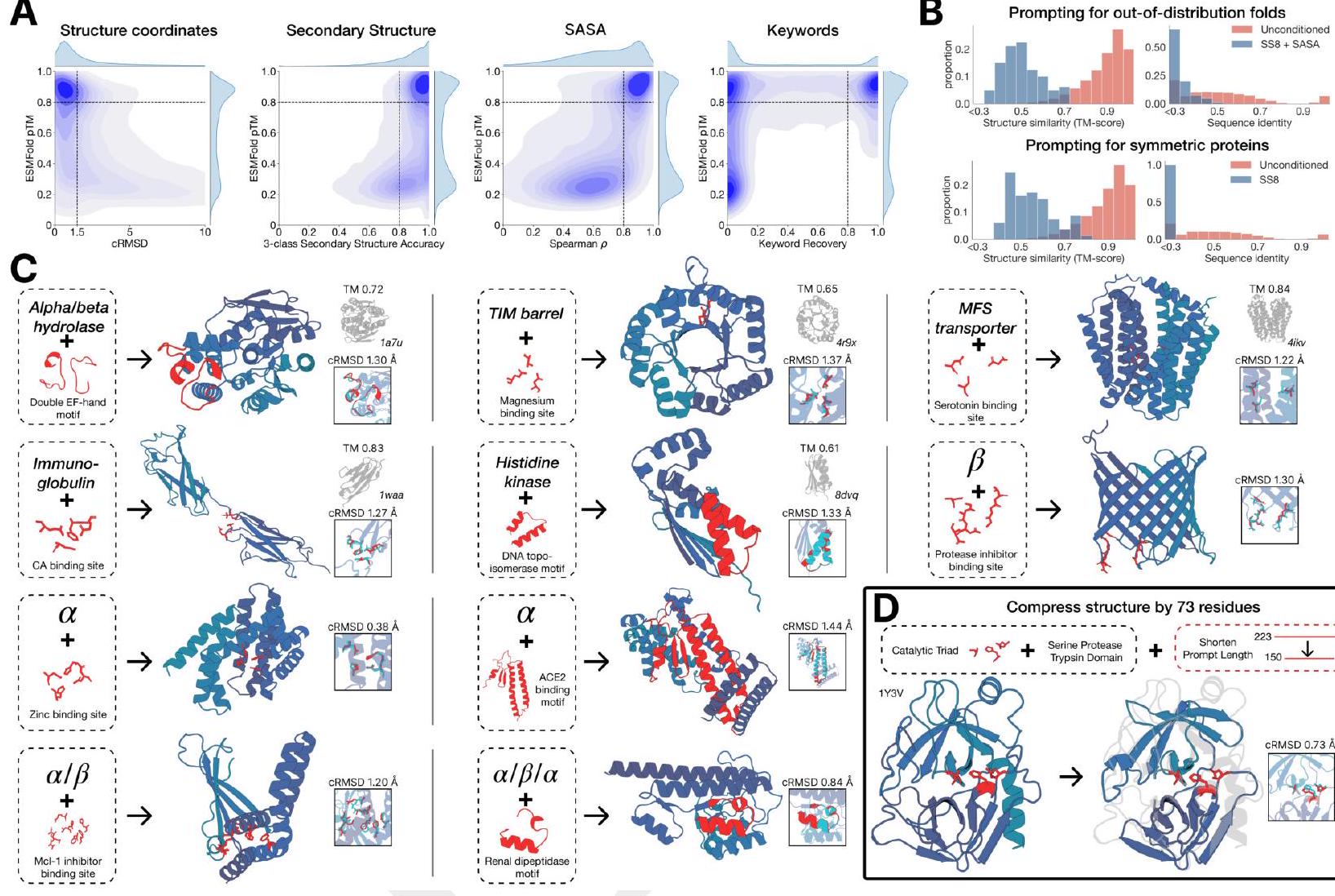

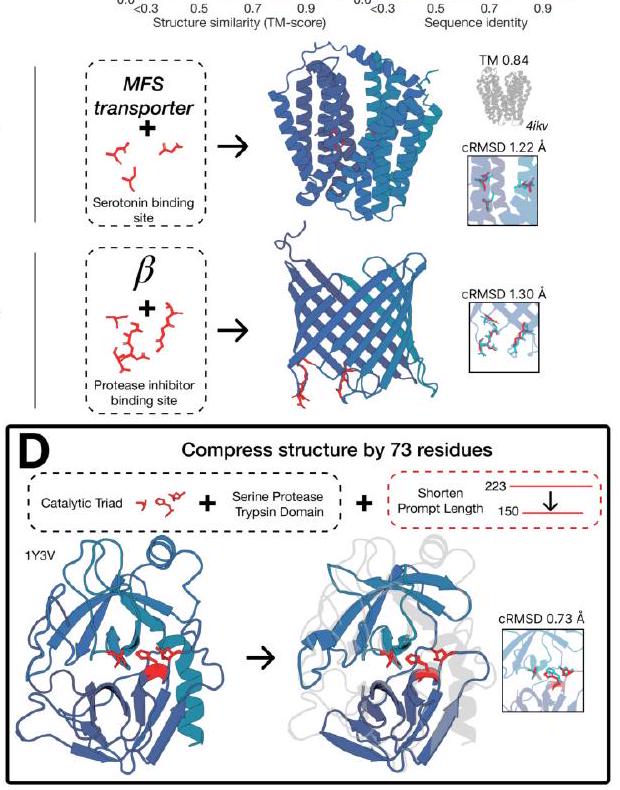

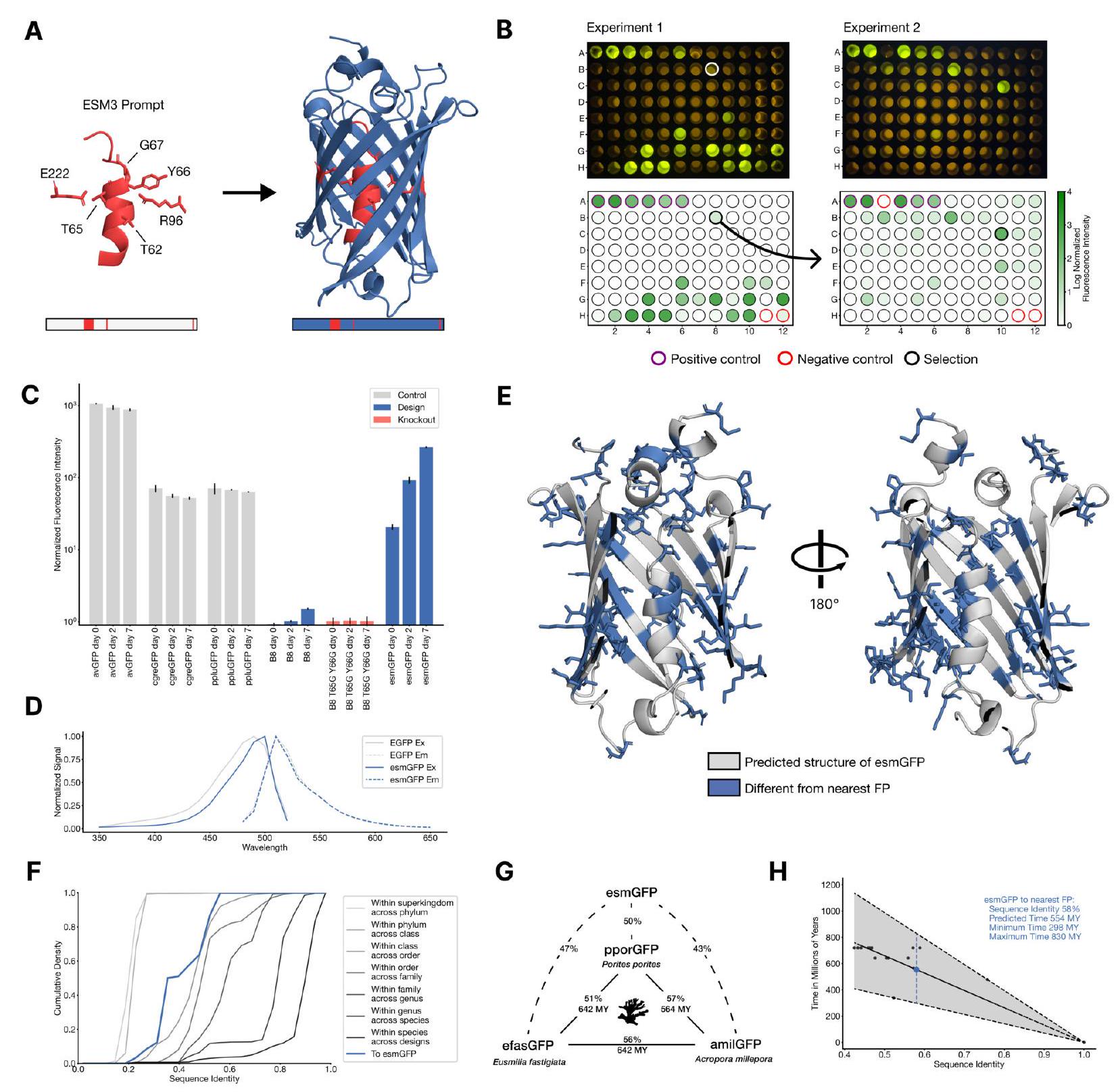

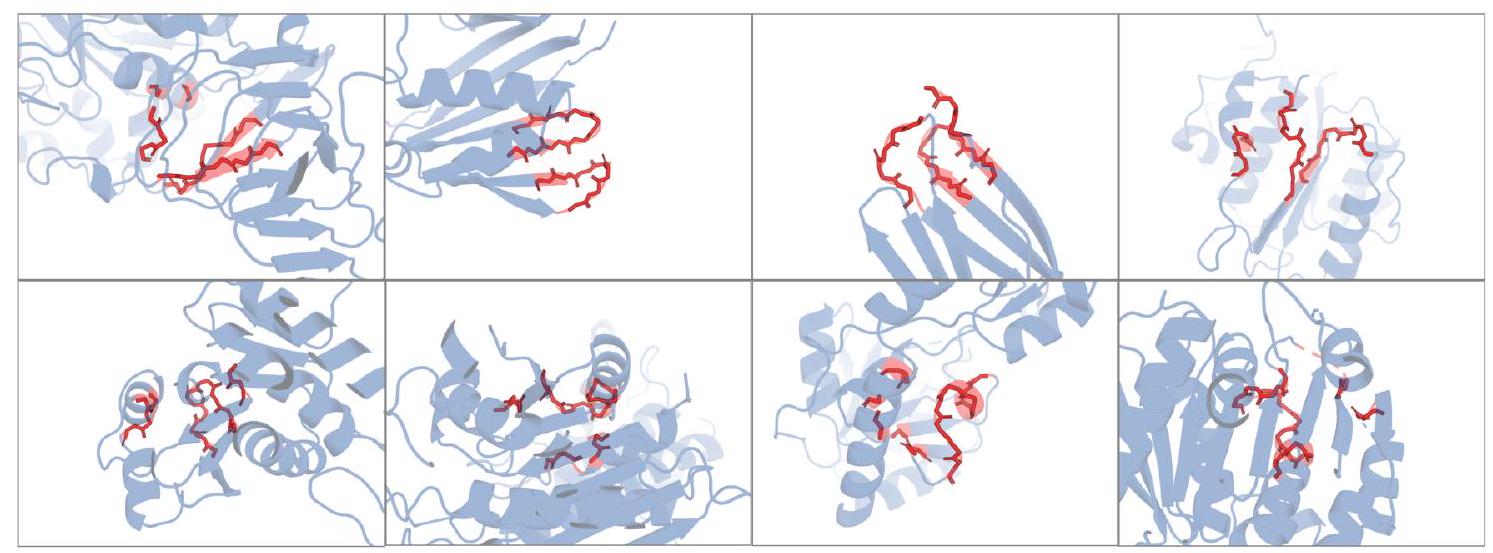

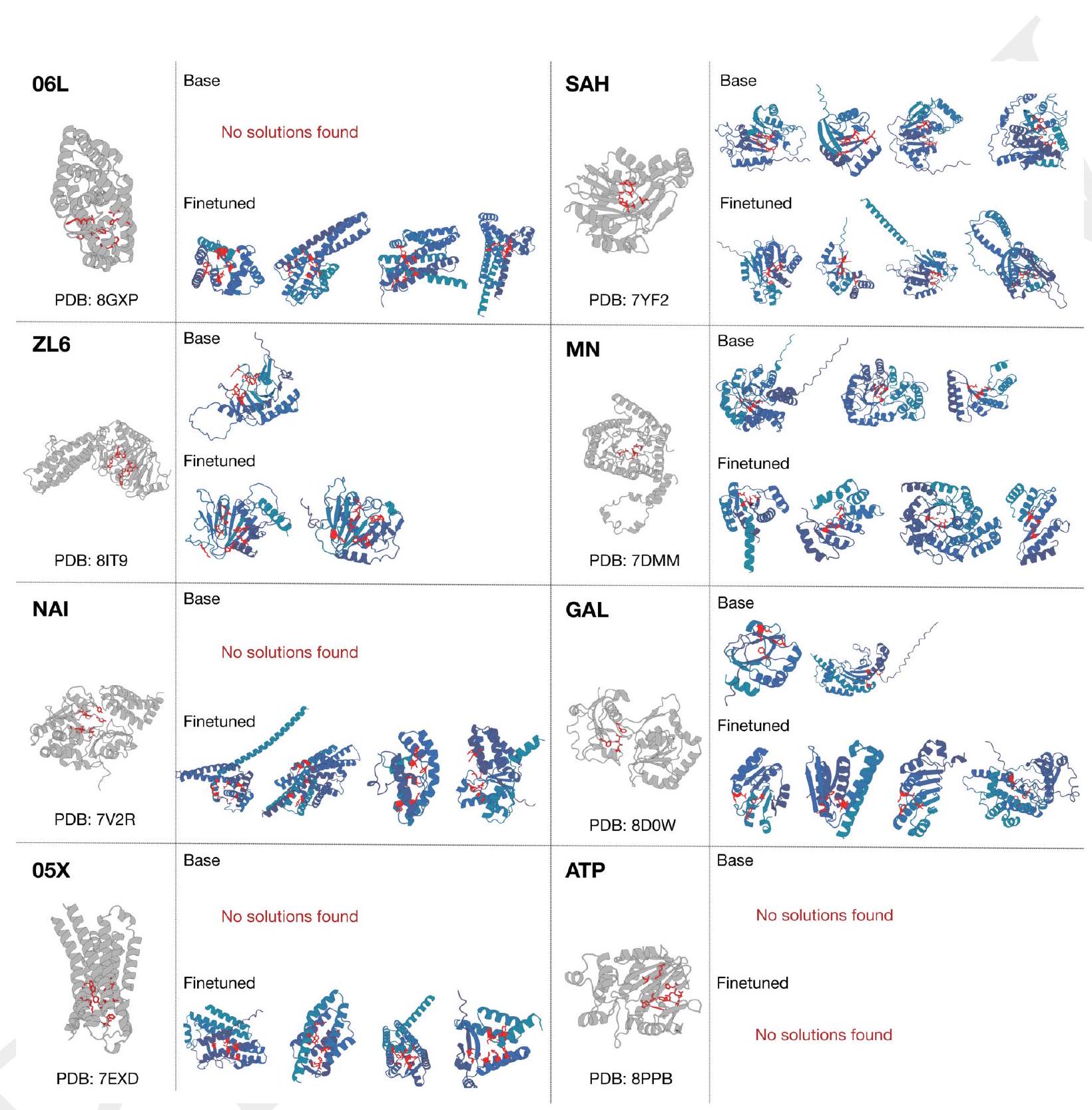

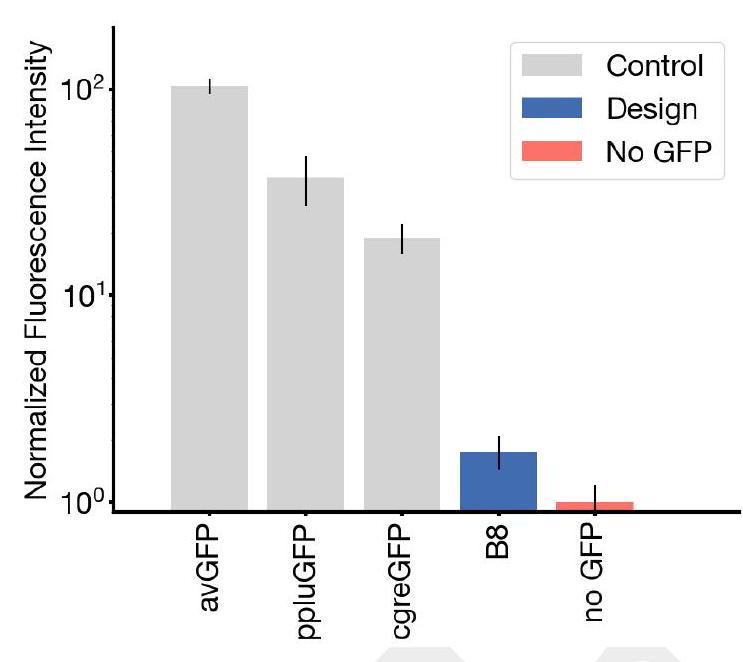

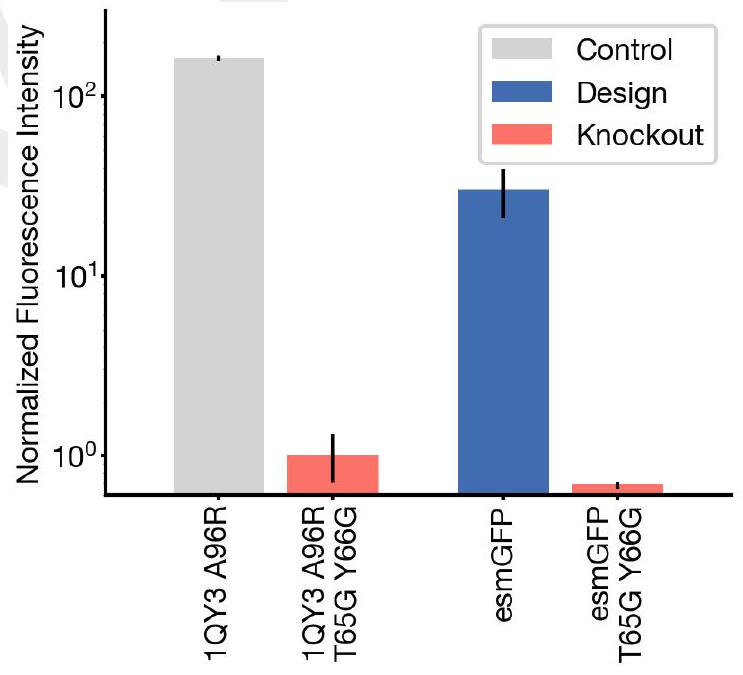

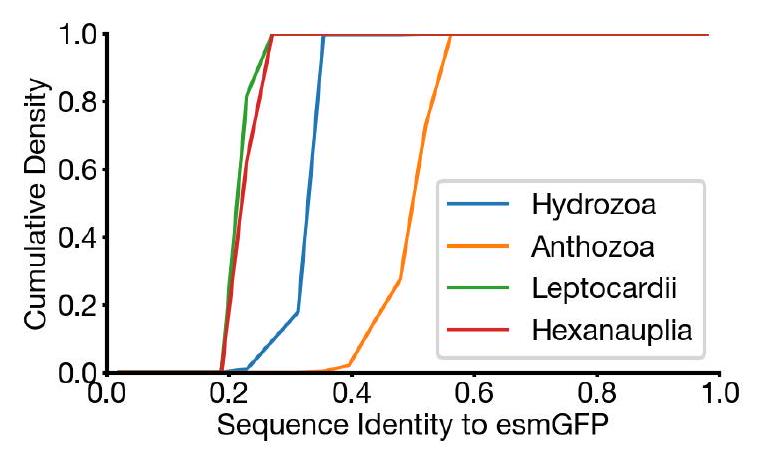

Figure 4. Generating a new fluorescent protein with a chain of thought. (A) We prompt ESM3 with the sequence and structure of residues required for forming and catalyzing the chromophore reaction, as well as the structure of part of the central alpha helix from a natural fluorescent protein (left). Through a chain of thought, ESM3 generates design candidates (right). (B) ESM3 found a bright GFP distant from other known GFPs in two experiments. We measured fluorescence in E. coli lysate. Top row, photograph of plates. Bottom row, plate reader fluorescence quantification. Positive controls of known GFPs are marked with purple circles, negative controls with no GFP sequence or no E. Coli are marked with red circles. In the first experiment (left) we expressed designs with a range of sequence identities. A notable design with low sequence identity to known fluorescent proteins appears in the well labeled B8 (highlighted in a black circle bottom, white circle top). We continue the chain of thought from the protein in B8 for the second experiment (right). A bright design appears in the well labeled C10 (black circle bottom, white circle top) which we designate esmGFP. (C) esmGFP exhibits fluorescence intensity similar to common GFPs. Normalized fluorescence is shown for a subset of proteins in experiment 2. (D) Excitation and emission spectra for esmGFP overlaid on the spectra of EGFP. (E) Two cutout views of the central alpha helix and the inside of the beta barrel of a predicted structure of esmGFP. The 96 mutations esmGFP has relative to its nearest neighbor, tagRFP, are shown in blue. (F) Cumulative density of sequence identity between fluorescent proteins across taxa. esmGFP has the level of similarity to all other FPs that is typically found when comparing sequences across orders, but within the same class. (G) Evolutionary distance by time in millions of years (MY) and sequence identities for three example anthozoa GFPs and esmGFP. (H) Estimator of evolutionary distance by time (MY) from GFP sequence identity. We estimate esmGFP is over 500 million years of natural evolution removed from the closest known protein.

In summary, the process of generating a new fluorescent protein involves using ESM3 to prompt the sequence and structure of residues required for forming and catalyzing the chromophore reaction, as well as the structure of part of the central alpha helix from a natural fluorescent protein. ESM3 then generates design candidates through a chain of thought. The resulting designs are tested for fluorescence in E. coli lysate, and the brightest design is selected for further analysis. The new fluorescent protein, designated esmGFP, exhibits fluorescence intensity similar to common GFPs and has a level of similarity to all other FPs that is typically found when comparing sequences across orders, but within the same class. The evolutionary distance of esmGFP is estimated to be over 500 million years of natural evolution removed from the closest known protein.

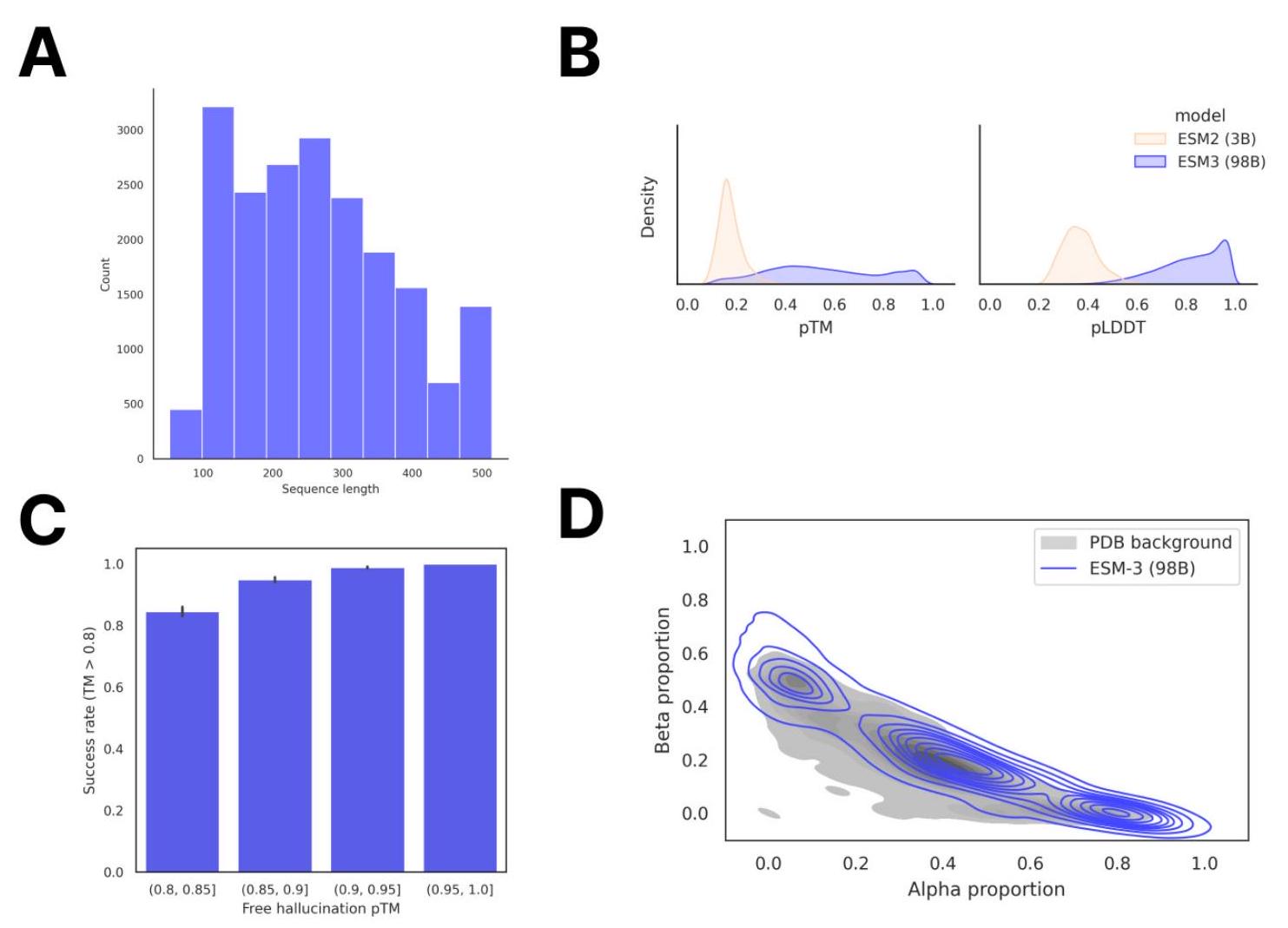

In order to create new GFP sequences, we are using a pre-trained model called ESM3 with 7B parameters. We are directly prompting this model to generate a protein sequence that is 229 residues long, and we are conditioning it on certain critical residues that are involved in the formation and catalysis of the chromophore reaction. These residues are Thr62, Thr65, Tyr66, Gly67, Arg96, and Glu222. Additionally, we are also conditioning the model on the structure of residues 58 through 71, which are known to be important for the energetic favorability of chromophore formation. To do this, we provide sequence tokens, structure tokens, and atomic coordinates of the backbone as input, and the generation process begins from a nearly completely masked array of tokens corresponding to 229 residues, except for the token positions used for conditioning.

The process of generating designs using a chain-of-thought procedure involves several steps. First, the model generates structure tokens, which create a protein backbone. These backbones are then filtered based on their atomic coordination of the active site and overall structure differentiation from the 1QY3 backbone. The generated structure is then added to the original prompt to generate a sequence conditioned on the new prompt.

Next, an iterative joint optimization is performed, alternating between optimizing the sequence and the structure. During this process, chains-of-thought that lose atomic coordination of the active site are rejected.

Finally, a computational pool of thousands of candidate GFP designs is drawn from the intermediate and final points in the iterative joint optimization stage. These designs are then bucketed by sequence similarity to known fluorescent proteins and filtered and ranked using a variety of metrics.

Overall, this process allows for the generation of novel GFP designs that are optimized for their atomic coordination and overall structure, while also being filtered and ranked based on their similarity to known fluorescent proteins.

In this experiment, the researchers synthesized and expressed 88 different protein designs in E. coli, each with varying levels of sequence similarity to naturally occurring GFPs. They then measured the fluorescence activity of each protein at an excitation wavelength of 485 nm. The results showed that some of the designs had higher sequence identity with naturally occurring GFPs and had similar brightness levels. However, one design in well B8 had only 36% sequence identity to the 1QY3 sequence and 57% sequence identity to the nearest existing fluorescent protein, tagRFP. This design was 50x less bright than natural GFPs and took a week to mature its chromophore, but it still showed a signal of function in a new portion of sequence space that has not been found in nature or through protein engineering.

The process of generating a protein with improved brightness involves creating a sequence of designs in well B8 and using an iterative joint optimization and ranking procedure to improve the design. This is followed by creating a second 96 well plate of designs and using a plate reader assay to find several designs with a brightness in the range of GFPs found in nature. The best design, located in well C10 of the second plate, is designated as esmGFP.

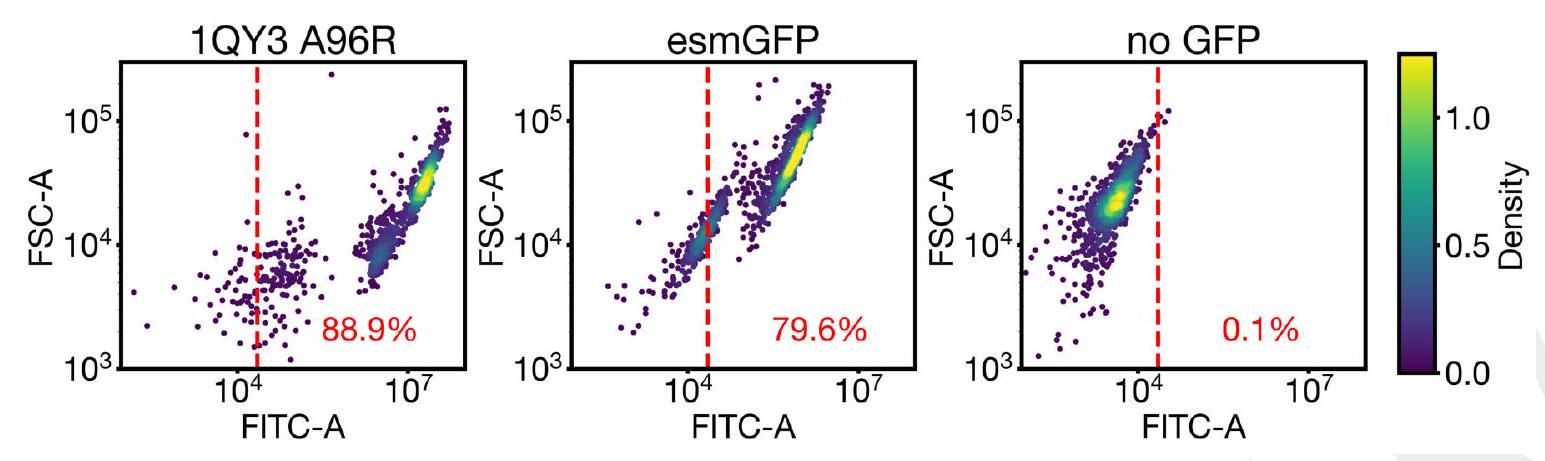

The study found that esmGFP, a variant of GFP, exhibits brightness comparable to natural GFPs after two days of chromophore maturation. The fluorescence intensity was measured at 0, 2, and 7 days for esmGFP, a replicate of B8, a chromophore knockout of B8, and three natural GFPs. The results showed that esmGFP takes longer to mature than the known GFPs, but achieves a comparable brightness after two days. To confirm that the fluorescence was mediated by the intended Thr65 and Tyr66, the researchers mutated these residues to glycine in B8 and esmGFP variants, resulting in the loss of fluorescence activity.

The excitation and emission spectra of esmGFP were analyzed and compared to those of EGFP. The peak excitation of esmGFP occurs at 496 nm, which is shifted by 7 nm compared to EGFP's peak at 489 nm. However, both proteins emit at a peak of 512 nm. The FWHM of the excitation spectrum of esmGFP is narrower at 39 nm compared to EGFP's FWHM of 56 nm. On the other hand, the FWHM of their emission spectra are highly comparable at 35 nm and 39 nm, respectively. Overall, esmGFP exhibits spectral properties that are consistent with known GFPs.

The researchers conducted a BLAST and MMseqs search to compare the sequence and structure of esmGFP to known proteins. The top hit was tagRFP, which is a designed variant with 58% sequence identity to esmGFP. The closest wildtype sequence to esmGFP is eqFP578, a red fluorescent protein, which differs from esmGFP by 107 sequence positions (53% identity). The sequence differences between esmGFP and tagRFP occur throughout the structure, with 22 mutations occurring in the protein's interior, which is known to be sensitive to mutations due to chromophore proximity and a high density of interactions.

Examination of a sequence alignment of 648 natural and designed GFP-like fluorescent proteins revealed that esmGFP

The study analyzed a sequence alignment of 648 natural and designed GFP-like fluorescent proteins, and found that esmGFP has a level of similarity to other FPs that is typically found when comparing sequences across taxonomic orders within the same taxonomic class. This means that the difference between esmGFP and other FPs is similar to the level of difference between FPs belonging to different orders within the same class of marine invertebrates. The closest FPs to esmGFP come from the anthozoa class, with an average sequence identity of 51.4%, but esmGFP also shares some sequence identity with FPs from the hydrozoa class, with an average sequence identity of 33.4%.

The study used a time-calibrated phylogenetic analysis of Anthozoans to estimate the evolutionary time between different species. They then used this information to construct a simple estimator that correlates sequence identity between fluorescent proteins (FPs) to the millions of years of evolutionary time between the species. By using this estimator, they were able to estimate that esmGFP represents an equivalent of over 500 million years of evolution from the closest protein that has been found in nature.

As an expert in the field, you may be interested to know that recent research has shown that language models can be used to explore a much wider range of protein designs than what has been discovered through natural evolution. These models are able to generate functional proteins that would have taken evolution hundreds of millions of years to discover.

The language models do not rely on the physical constraints of evolution, but instead use a more abstract approach to construct a model of the many potential paths that evolution could have taken. This allows for a much broader exploration of protein design space, and could potentially lead to the discovery of new and useful proteins that have not yet been found through traditional evolutionary processes.

Proteins are located within a structured space where each protein is surrounded by other proteins that are only one mutational event away. This means that the evolution of proteins can be visualized as a network within this space, connecting all proteins through the paths that evolution can take between them. These paths represent the ways in which one protein can transform into another without negatively impacting the overall function of the system. Essentially, the network of protein evolution is a map of the possible paths that proteins can take as they evolve and adapt to changing environments.

In simpler terms, a language model is being used to analyze and understand the structure of proteins. The model sees proteins as occupying a certain space, with some areas being more densely populated than others. By analyzing this space, the model can identify which parts of the protein are more accessible to evolution.

To predict the next token in a protein sequence, the language model must understand how evolution moves through the space of possible proteins. This requires the model to learn what factors determine whether a particular path through the protein space is feasible for evolution.

Simulations are essentially computer-based representations of real-world scenarios or phenomena. They are designed to mimic the behavior of a system or process in a virtual environment, allowing researchers to study and analyze it without the need for physical experimentation.

In the context of the given text, the language model being referred to is a type of simulation that has been trained to predict possible outcomes of evolution. This model, known as ESM3, is an emergent simulator that has been learned from solving a token prediction task on data generated by evolution.

The idea behind this approach is that neural networks, which are used to train the model, are capable of discovering the underlying structure of the data they are trained on. By solving the token prediction task, the model is forced to learn the deep structure that determines which steps evolution can take, effectively simulating the fundamental biology of proteins.

In the process of generating a new fluorescent protein, ESM3's first chain of thought to B8 is particularly interesting. This is because B8 is located at a distance of 96 mutations from its closest neighbor, which means that there are an astronomical number of possible proteins that could be created. However, only a very small fraction of these proteins would actually be functional, as fluorescence decreases rapidly even with just a few random mutations. The fact that there are other bright designs in the vicinity of B8, such as C10, suggests that ESM3 has discovered a new area of protein space that is dense with fluorescent proteins, despite not having been explored by nature.

The authors of the text are expressing their gratitude towards various individuals and teams who have provided support and feedback during the development of ESM3-open. They specifically thank Eric Schreiter, Karel Svoboda, and Srinivas Turaga for their feedback on the properties of esmGFP, Marko Iskander, Vishvajit Kher, and the Andromeda cluster team for their support on compute infrastructure, and April Pawluk for her assistance with manuscript preparation. Additionally, the authors acknowledge the experts who provided feedback on their approach to responsible development and those who participated in the review of the risks and benefits of releasing ESM3-open.

Open Model and Responsible Development is a concept that emphasizes the importance of transparency, collaboration, and ethical considerations in the development of models and technologies. It involves making the development process open to the public, allowing for input and feedback from a diverse range of stakeholders. This approach ensures that the models and technologies developed are not only effective but also responsible and ethical.

J.G. and I.S. are likely experts in the field of model and technology development who are advocating for the adoption of this approach. They may be involved in research, policy-making, or industry practices related to responsible development.

I do not have personal opinions or beliefs, but i can provide you with an explanation of the term "competing interests."

competing interests refer to situations where an individual or organization has multiple interests that may conflict with each other. this can occur in various contexts, such as in research, business, or politics. for example, a researcher may have a financial interest in a company that produces a product they are studying, which could potentially influence their findings. in such cases, it is important to disclose any competing interests to ensure transparency and avoid any potential biases.

The authors listed are employees of EvolutionaryScale, PBC, except for P.D.H. who is a cofounder of Stylus Medicine, Circle Labs, and Spotlight Therapeutics, and serves on the board of directors at Stylus Medicine. P.D.H. is also a board observer at EvolutionaryScale, Circle Labs, and Spotlight Therapeutics, a scientific advisory board member at Arbor Biosciences and Veda Bio, and an advisor to NFDG, Varda Space, and Vial Health. Additionally, patents have been filed related to aspects of this work.

Certainly! The "MODEL AND DATA AVAILABILITY" section typically appears in research papers or reports that involve the use of a specific model or dataset. It provides information about the model or dataset used in the study, including where it can be accessed or obtained.

For example, if the study used a machine learning model, the section might include details about the type of model used, the parameters used to train the model, and any preprocessing steps that were taken. It might also include information about where the model can be downloaded or accessed, such as a GitHub repository or a website.

Similarly, if the study used a specific dataset, the section might include details about the dataset, such as its size, the variables included, and any preprocessing steps that were taken. It might also include information about where the dataset can be downloaded or accessed, such as a data repository or a website.

The ESM3-open model is a tool that has been developed for academic research purposes. It has been reviewed by a committee of technical experts who have determined that the benefits of releasing the model far outweigh any potential risks. The model will be made available via API with a free access tier for academic research. Additionally, the sequence of esmGFP, along with other GFPs generated for this work, has been committed to the public domain. Plasmids for esmGFP-C10 and esmGFP-B8 will also be made available.

Certainly! In academic writing, the "References" section is typically included at the end of a paper or document. It provides a list of all the sources that were cited or referenced within the text. The purpose of this section is to give credit to the original authors and to allow readers to locate and access the sources for further reading or research.

The format of the References section can vary depending on the citation style being used (e.g. APA, MLA, Chicago), but it typically includes the author's name, the title of the source, the publication date, and other relevant information such as the publisher or journal name.

It's important to note that the References section should only include sources that were actually cited or referenced within the text. It's not a place to list all the sources that were consulted during the research process.

The article "MGnify: the microbiome analysis resource in 2020" discusses the development and capabilities of the MGnify platform, which is a resource for analyzing microbiome data. The authors describe the various tools and features available on the platform, including taxonomic classification, functional annotation, and metagenome assembly. They also discuss the use of MGnify in various research studies, such as the analysis of gut microbiomes in patients with inflammatory bowel disease. Overall, the article provides a comprehensive overview of the MGnify platform and its potential applications in microbiome research.

The article "AlphaFold Protein Structure Database in 2024: providing structure coverage for over 214 million protein sequences" discusses the development of a database that provides structural information for over 214 million protein sequences. The database is called the AlphaFold Protein Structure Database and it was created using a deep learning algorithm called AlphaFold. The article explains how the database was created and how it can be used by researchers to better understand protein structures and their functions. The authors also discuss the potential impact of this database on the field of structural biology and drug discovery.

The article discusses the use of unsupervised learning to analyze a large dataset of protein sequences. The researchers developed a new algorithm that can scale to 250 million protein sequences, allowing them to identify patterns and structures in the data that were previously unknown. The study has implications for understanding the biological function of proteins and could lead to new discoveries in the field of biochemistry.

[9] Noelia Ferruz, Steffen Schmidt, and Birte Höcker. ProtGPT2 is a deep unsupervised language model

ProtGPT2 is a deep unsupervised language model that has been developed for protein design. It is a type of artificial intelligence that uses natural language processing to analyze and generate protein sequences. The model is based on the Generative Pre-trained Transformer 2 (GPT-2) architecture, which is a neural network that can generate text based on a given prompt. ProtGPT2 has been trained on a large dataset of protein sequences and can be used to generate new protein sequences that are optimized for specific functions or properties. This technology has the potential to revolutionize the field of protein engineering and could lead to the development of new drugs and therapies.

The paper titled "Language models generalize beyond natural proteins" by Robert Verkuil, Ori Kabeli, Yilun Du, Basile IM Wicky, Lukas F Milles, Justas Dauparas, David Baker, Sergey Ovchinnikov, Tom Sercu, and Alexander Rives discusses the use of language models in predicting protein structures. The authors propose a new approach that uses language models to predict protein structures, which can generalize beyond natural proteins. The paper presents a detailed analysis of the performance of the proposed approach and compares it with existing methods. The authors conclude that the proposed approach has the potential to significantly improve the accuracy of protein structure prediction and can be used to design new proteins with specific functions.

The article "ProtTrans: Towards Cracking the Language of Life's Code Through Self-Supervised Deep Learning and High Performance Computing" discusses a new approach to understanding the language of life's code through self-supervised deep learning and high performance computing. The authors propose a new method called ProtTrans, which uses deep learning algorithms to analyze protein sequences and predict their structures. The approach is based on self-supervised learning, which means that the algorithm learns from the data itself without the need for labeled data. The authors also use high performance computing to speed up the training process and improve the accuracy of the predictions. The article is published in the IEEE Transactions on Pattern Analysis and Machine Intelligence and is authored by a team of researchers from Oak Ridge National Lab and other institutions.

The paper titled "RITA: a Study on Scaling Up Generative Protein Sequence Models" by Daniel Hesslow, Niccoló Zanichelli, Pascal Notin, Iacopo Poli, and Debora Marks discusses the development of a new generative protein sequence model called RITA. The authors aim to improve the scalability of existing models by introducing a new architecture that can handle large datasets and generate high-quality protein sequences. The paper presents a detailed analysis of the performance of RITA on various benchmark datasets and compares it with other state-of-the-art models. The authors also discuss the potential applications of RITA in drug discovery and protein engineering. Overall, the paper provides a valuable contribution to the field of protein sequence modeling and offers a promising new approach for generating high-quality protein sequences.

The paper titled "Protein generation with evolutionary diffusion: sequence is all you need" by Sarah Alamdari, Nitya Thakkar, Rianne van den Berg, Alex Xijie Lu, Nicolo Fusi, Ava Pardis Amini, and Kevin K Yang proposes a new method for generating protein sequences using evolutionary diffusion. The authors argue that current methods for generating protein sequences are limited by their reliance on pre-existing protein structures, which can be biased and may not accurately reflect the diversity of protein sequences in nature.

The proposed method, called "evolutionary diffusion," uses a generative model that learns to generate protein sequences by simulating the process of evolution. The model starts with a set of initial protein sequences and iteratively generates new sequences by introducing mutations and selecting the most fit sequences based on a fitness function. The fitness function is designed to capture the structural and functional properties of proteins, such as their stability, solubility, and binding affinity.

The authors evaluate the performance of their method on several benchmark datasets and show that it outperforms existing methods in terms of generating diverse and functional protein sequences. They also demonstrate the potential of their method for discovering new protein structures and functions.

Overall, the paper presents a promising new approach for generating protein sequences that could have important implications for protein engineering and drug discovery.

The article "Modeling aspects of the language of life through transfer-learning protein sequences" by Michael Heinzinger, Ahmed Elnaggar, Yu Wang, Christian Dallago, Dmitrii Nechaev, Florian Matthes, and Burkhard Rost discusses the use of transfer learning to model the language of life through protein sequences. The authors propose a novel approach to transfer learning that involves using a pre-trained language model to extract features from protein sequences, which are then used to train a downstream model for a specific task.

The authors evaluate their approach on several tasks, including protein classification, protein-protein interaction prediction, and protein-DNA interaction prediction. They show that their approach outperforms existing methods on these tasks, demonstrating the effectiveness of transfer learning for modeling the language of life through protein sequences.

[17] Roshan Rao, Joshua Meier, Tom Sercu, Sergey Ovchinnikov, and Alexander Rives. Transformer protein language models are unsupervised structure learners. In International Conference on Learning Representations, page 2020.12.15.422761. Cold

The paper titled "Transformer protein language models are unsupervised structure learners" by Roshan Rao, Joshua Meier, Tom Sercu, Sergey Ovchinnikov, and Alexander Rives was presented at the International Conference on Learning Representations in December 2021. The paper discusses the use of transformer protein language models as unsupervised structure learners. The authors propose a new approach to protein structure prediction that leverages the power of transformer models to learn the underlying structure of proteins without the need for labeled data. The paper presents experimental results that demonstrate the effectiveness of the proposed approach in predicting protein structures. The paper is available on the Cold Spring Harbor Laboratory website and can be accessed using the doi $10.1101 / 2020.12 .15 .422761$.

The paper "xtrimopglm: Unified $100 b$-scale pre-trained transformer for deciphering the language of protein" proposes a new pre-trained transformer model called xtrimopglm, which is designed to understand the language of proteins. The model is trained on a large dataset of protein sequences and is capable of predicting protein properties such as secondary structure, solvent accessibility, and contact maps. The authors claim that xtrimopglm outperforms existing state-of-the-art models in these tasks. The paper is currently available on the preprint server bioRxiv and has not yet been peer-reviewed.

The paper titled "Language Models are FewShot Learners" by Tom B. Brown and his team of researchers explores the idea that language models, specifically those based on neural networks, have the ability to learn new tasks with very little training data. This is known as few-shot learning, and it is a highly sought-after capability in the field of machine learning.

The paper presents a series of experiments that demonstrate the effectiveness of few-shot learning in language models. The researchers use a variety of tasks, such as question answering and sentiment analysis, to show that their models can achieve high accuracy with just a few examples of each task.

The paper also discusses the implications of this research for natural language processing and machine learning more broadly. The authors argue that few-shot learning could be a key component of future AI systems, allowing them to adapt quickly to new tasks and environments.

Overall, the paper is a significant contribution to the field of machine learning and provides valuable insights into the capabilities of language models.

The paper "Training ComputeOptimal Large Language Models" discusses a new approach to training large language models that is more computationally efficient than previous methods. The authors propose a technique called "compute-optimal training," which involves optimizing the training process to minimize the amount of computation required while still achieving high accuracy. This is achieved by using a combination of techniques such as dynamic batching, gradient checkpointing, and adaptive learning rate scheduling. The authors demonstrate the effectiveness of their approach on several large-scale language modeling tasks, including the WikiText-103 and C4 benchmarks. Overall, the paper presents an innovative approach to training large language models that could have significant implications for the field of natural language processing.

I'm sorry, but without any context or information about what you are referring to, I cannot provide an explanation. Please provide more details or a specific topic for me to assist you with.

The article "Accurate structure prediction of biomolecular interactions with AlphaFold 3" discusses the development of a new software called AlphaFold 3, which is capable of accurately predicting the structure of biomolecules and their interactions. The authors of the article are a team of researchers from various institutions, including DeepMind, the University of Cambridge, and the University of Oxford.

The article explains that AlphaFold 3 uses a combination of deep learning and physics-based modeling to predict the structure of biomolecules. The software is trained on a large dataset of known protein structures and is able to accurately predict the structure of new proteins based on their amino acid sequence.

The authors also discuss the potential applications of AlphaFold 3 in drug discovery and protein engineering. By accurately predicting the structure of biomolecules, researchers can better understand how they interact with drugs and other molecules, which can lead to the development of more effective treatments for diseases.

Overall, the article highlights the importance of accurate structure prediction in the field of biomolecular research and the potential impact of AlphaFold 3 on this field.

The article "De novo design of protein structure and function with RFdiffusion" discusses a new method for designing proteins from scratch, called RFdiffusion. The authors, led by David Baker, used this method to design several new proteins with specific functions, such as binding to a particular target molecule or catalyzing a specific chemical reaction. The RFdiffusion method involves using machine learning algorithms to predict the structure and function of a protein based on its amino acid sequence, and then iteratively refining the design until it meets the desired specifications. The authors believe that this method could have important applications in fields such as drug discovery and biotechnology.

The article "Illuminating protein space with a programmable generative model" discusses the development of a new computational tool that can predict the structure and function of proteins. The tool uses a generative model, which is a type of artificial intelligence algorithm that can create new data based on patterns it has learned from existing data. The authors of the article used this generative model to create a large dataset of protein structures and functions, which they then used to train the model to predict the properties of new proteins. The tool has the potential to greatly accelerate the discovery of new drugs and therapies, as well as to deepen our understanding of the role of proteins in biological processes.

The paper "Out of many, one: Designing and scaffolding proteins at the scale of the structural universe with genie 2" by Yeqing Lin, Minji Lee, Zhao Zhang, and Mohammed AlQuraishi proposes a new method for designing and scaffolding proteins using a software called Genie 2. The authors argue that their approach can be used to create proteins with specific functions and properties, which could have important applications in fields such as medicine and biotechnology.

The paper begins by discussing the challenges of designing proteins from scratch, noting that the vast number of possible amino acid sequences makes it difficult to predict the structure and function of a given protein. The authors then introduce Genie 2, a software that uses machine learning algorithms to predict the structure and stability of proteins based on their amino acid sequence.

The authors then describe how they used Genie 2 to design and scaffold proteins with specific properties, such as high stability and the ability to bind to specific molecules. They also discuss how they were able to use the software to optimize the design of existing proteins, improving their stability and function.

The article "The Green Fluorescent Protein" by Roger Y. Tsien, published in the Annual Review of Biochemistry in 1998, discusses the discovery and properties of the green fluorescent protein (GFP). GFP is a protein that emits green light when exposed to ultraviolet or blue light, and it has become a widely used tool in biological research for labeling and tracking cells and proteins.

The article begins by providing a brief history of the discovery of GFP, which was first isolated from the jellyfish Aequorea victoria in the 1960s. Tsien then goes on to describe the structure and function of GFP, including its chromophore, which is responsible for its fluorescence. He also discusses the various ways in which GFP has been modified and engineered to improve its properties, such as increasing its brightness or changing its color.

The article then delves into the many applications of GFP in biological research, including its use as a reporter gene, a protein tag, and a tool for imaging cells and tissues. Tsien provides numerous examples of how GFP has been used in different research contexts, such as studying gene expression, tracking protein localization, and monitoring cell signaling.

The paper "Maskgit: Masked Generative Image Transformer" by Huiwen Chang, Han Zhang, Lu Jiang, Ce Liu, and William T. Freeman proposes a new approach to generative image modeling using a transformer architecture with masked attention. The authors introduce a novel masked generative image transformer (MaskGIT) that can generate high-quality images with a high degree of control over the content and style of the generated images.

The MaskGIT model consists of an encoder and a decoder, both of which are based on the transformer architecture. The encoder takes an input image and generates a set of feature maps, which are then passed through a series of masked attention layers. The decoder then takes these feature maps and generates a new image by applying a series of convolutions and upsampling operations.

The key innovation of MaskGIT is the use of masked attention, which allows the model to selectively attend to different parts of the input image during the encoding process. This enables the model to generate images with specific content and style features, such as changing the color of an object or adding a new object to the scene.

The authors evaluate the performance of MaskGIT on several benchmark datasets, including CIFAR-10, ImageNet, and COCO. They show that MaskGIT outperforms existing generative image models in terms of both image quality and control over content and style.

The paper presents a new method for density estimation, which is a fundamental problem in machine learning and statistics. The proposed method is based on a deep neural network architecture that is designed to be both accurate and interpretable. The authors show that their method outperforms existing state-of-the-art methods on a variety of benchmark datasets, while also being computationally efficient and easy to implement. The paper is relevant to experts in machine learning and statistics who are interested in density estimation and deep learning.

The paper "Neural discrete representation learning" by Aaron van den Oord, Oriol Vinyals, and Koray Kavukcuoglu proposes a new approach to learning discrete representations using neural networks. The authors argue that existing methods for learning discrete representations, such as vector quantization and clustering, have limitations in terms of scalability and the ability to capture complex relationships between data points.

The proposed approach, called Vector Quantized-Variational Autoencoder (VQ-VAE), combines the benefits of vector quantization and variational autoencoders. The VQ-VAE consists of an encoder network that maps input data to a continuous latent space, and a decoder network that generates a discrete representation of the input data from the latent space. The encoder network is trained to minimize the reconstruction error between the input data and the generated discrete representation, while the decoder network is trained to maximize the likelihood of the generated discrete representation given the input data.

The authors evaluate the VQ-VAE on several benchmark datasets, including image classification and language modeling tasks. They show that the VQ-VAE outperforms existing methods in terms of both accuracy and efficiency, and is able to learn more complex and meaningful representations of the data.

The paper "FlashAttention: Fast and Memory-Efficient Exact Attention with IOAwareness" proposes a new approach to attention mechanisms in deep learning models. The authors introduce a technique called "FlashAttention" that is designed to be both fast and memory-efficient, while still achieving high accuracy.

The key idea behind FlashAttention is to use a combination of hashing and IO-aware scheduling to reduce the amount of memory required for attention computations. The authors show that this approach can achieve significant speedups and memory savings compared to traditional attention mechanisms, without sacrificing accuracy.

Overall, the paper presents an interesting and promising new approach to attention in deep learning, and could have important implications for improving the efficiency and scalability of these models.

The paper "UniRef clusters: a comprehensive and scalable alternative for improving sequence similarity searches" by Baris E Suzek, Yuqi Wang, Hongzhan Huang, Peter B McGarvey, Cathy H Wu, and UniProt Consortium proposes a new approach to improve sequence similarity searches. The authors suggest using UniRef clusters, which are groups of related protein sequences that have been clustered together based on their sequence similarity. These clusters can be used as a more comprehensive and scalable alternative to traditional sequence similarity searches, which can be computationally expensive and may not always provide accurate results. The authors demonstrate the effectiveness of UniRef clusters in improving sequence similarity searches and suggest that this approach could be useful for a wide range of applications in bioinformatics.

The article "MGnify: the microbiome sequence data analysis resource in 2023" discusses a resource called MGnify, which is used for analyzing microbiome sequence data. The authors of the article are experts in the field of microbiome research and data analysis. The article was published in the journal Nucleic Acids Research in December 2022 and can be accessed online using the provided DOI.

The article "Observed antibody space: A diverse database of cleaned, annotated, and translated unpaired and paired antibody sequences" by Tobias H. Olsen, Fergus Boyles, and Charlotte M. Deane discusses the creation of a database of antibody sequences that have been cleaned, annotated, and translated. The database includes both unpaired and paired antibody sequences and is intended to be a diverse resource for researchers studying antibodies. The article provides details on the methods used to create the database and the types of sequences included. It also discusses the potential applications of the database in the field of immunology.

The article discusses the RCSB Protein Data Bank, which is a database that contains information about the structures of biological macromolecules. These structures are important for research in various fields, including fundamental biology, biomedicine, biotechnology, and energy. The article provides information about the database and its contributors, as well as its potential applications in research and education.

The article "InterPro in 2022" discusses the latest updates and improvements to the InterPro database, which is a comprehensive resource for protein families, domains, and functional sites. The article highlights the importance of InterPro in providing accurate and reliable annotations for protein sequences, and how it has evolved over the years to incorporate new data sources and analysis tools. The authors also discuss the challenges and future directions for InterPro, including the need for better integration with other databases and the development of new methods for predicting protein function. Overall, the article provides a valuable overview of the current state of InterPro and its role in advancing our understanding of protein structure and function.

The paper "Foldseek: fast and accurate protein structure search" by Michel van Kempen, Stephanie Kim, Charlotte Tumescheit, Milot Mirdita, Johannes Söding, and Martin Steinegger presents a new software tool called Foldseek, which is designed to quickly and accurately search for protein structures. The authors describe the algorithm used by Foldseek and demonstrate its effectiveness in comparison to other existing tools. The paper is currently available on the preprint server bioRxiv and has not yet been peer-reviewed.

The paper titled "Training language models to follow instructions with human feedback" proposes a new approach to training language models that can follow instructions given by humans. The authors suggest that instead of relying solely on large datasets of text, language models can be trained using human feedback to improve their ability to understand and respond to instructions.

The proposed approach involves a two-step process. First, the language model is trained on a large dataset of text to learn the general structure of language. Then, the model is fine-tuned using human feedback to improve its ability to follow specific instructions.

The authors conducted several experiments to evaluate the effectiveness of their approach. They found that language models trained using human feedback were better able to follow instructions than models trained solely on text data.

Overall, the paper presents a promising new approach to training language models that could have significant implications for natural language processing and artificial intelligence more broadly.

The paper "Direct Preference Optimization: Your Language Model is Secretly a Reward Model" proposes a new approach to optimizing language models based on direct preference feedback from users. The authors argue that traditional methods of training language models using large datasets may not always capture the specific preferences of individual users. Instead, they suggest using a reward model that can be trained directly on user feedback to optimize the language model's performance.